AI gets a bad rap. To be clear, that’s generally for good reason: it’s either being put to nefarious use, is coming fer our jobs, or is being used as some marketing gimmick with no actual relation to genuine artificial intelligence. But the most impressive thing I saw in my time at CES 2025, and throughout the Nvidia RTX Blackwell Editor’s Day, was AI-based. And it’s a tangible advancement on the AI we gamers likely use every day: DLSS.

DLSS—or Deep Learning Super Sampling, to give it its full title—is Nvidia’s upscaling technology used to deliver higher frame rates for our PC games (mostly because ray tracing sucked them down), and single-handedly kicked off the upscaling revolution that is now demanded of every single GPU and games console maker across the planet.

And its fourth iteration offers “by far the most ambitious and most powerful DLSS yet.” So says Nvidia’s deep learning guru, Bryan Catanzaro. And the reason it’s such a massive deal is that it’s moving from using a US news network for its AI architecture to the technology behind Optimus Prime.

Yes, DLSS 4 is now powered by energon cubes. Wait, no, I think I’ve fundamentally misunderstood something vital. Hang on.

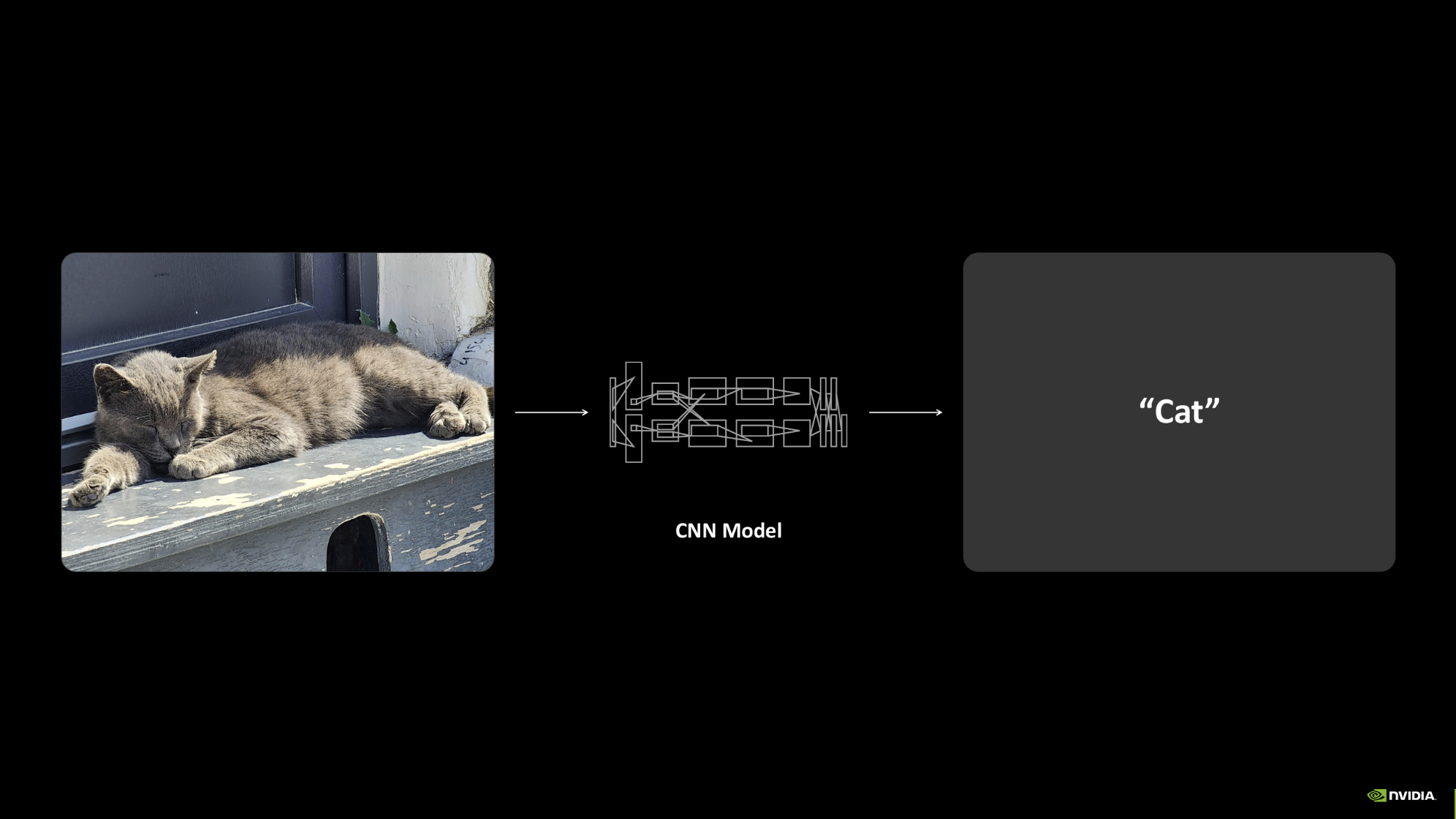

From the introduction of DLSS 2 in 2020, Nvidia’s upscaling has been using a technology called CNN, which actually stands for convolutional neural network (not Cable News Network, my apologies). CNN has gone hand-in-hand with GPUs since the early 2000s when it was shown that the parallel processing power of a graphics chip could hugely accelerate the performance of a convolutional neural network compared with a CPU. But the big breakthrough event was in 2012 when the AlexNet CNN architecture became the instant standard for AI image recognition.

Back then it was trained on a pair of GTX 580s. How times have changed…

In its most basic form, a CNN is designed to locally bunch pixels together in an image and analyse them in a branching structure, going from a low level to a higher level. This allows a CNN to summarise an image in a very computationally efficient way and, in the case of DLSS, display what it ‘thinks’ an image should look like based on all its aggregated pixels.
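To make that a bit more concrete, here's a toy sketch in Python of the two moves a CNN leans on: a small kernel sliding over local groups of pixels, then a pooling step that summarises the result at a coarser level. It's purely illustrative and has nothing to do with Nvidia's actual DLSS network. The function names, the 6x6 'image' and the hand-written edge kernel are all my own assumptions, and a real CNN learns its kernels during training rather than having them typed in.

```python
def conv2d(image, kernel):
    """Slide a small kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def max_pool2x2(feature_map):
    """Summarise each 2x2 block of the feature map with its largest value."""
    return [
        [
            max(feature_map[y][x], feature_map[y][x + 1],
                feature_map[y + 1][x], feature_map[y + 1][x + 1])
            for x in range(0, len(feature_map[0]) - 1, 2)
        ]
        for y in range(0, len(feature_map) - 1, 2)
    ]


# Hypothetical 6x6 grayscale image: dark on the left, bright on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hand-written vertical edge detector (an assumption for illustration;
# a trained CNN would learn kernels like this from data).
edge_kernel = [[-1, 0, 1],
               [-1, 0, 1],
               [-1, 0, 1]]

features = conv2d(image, edge_kernel)  # low level: 4x4 map of local edge responses
summary = max_pool2x2(features)        # higher level: 2x2 summary of those responses

for row in features:
    print(row)
print(summary)
```

Stack a few of these convolve-then-pool stages and each layer ends up seeing a wider patch of the original image, which is the low-level to high-level branching structure in a nutshell.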

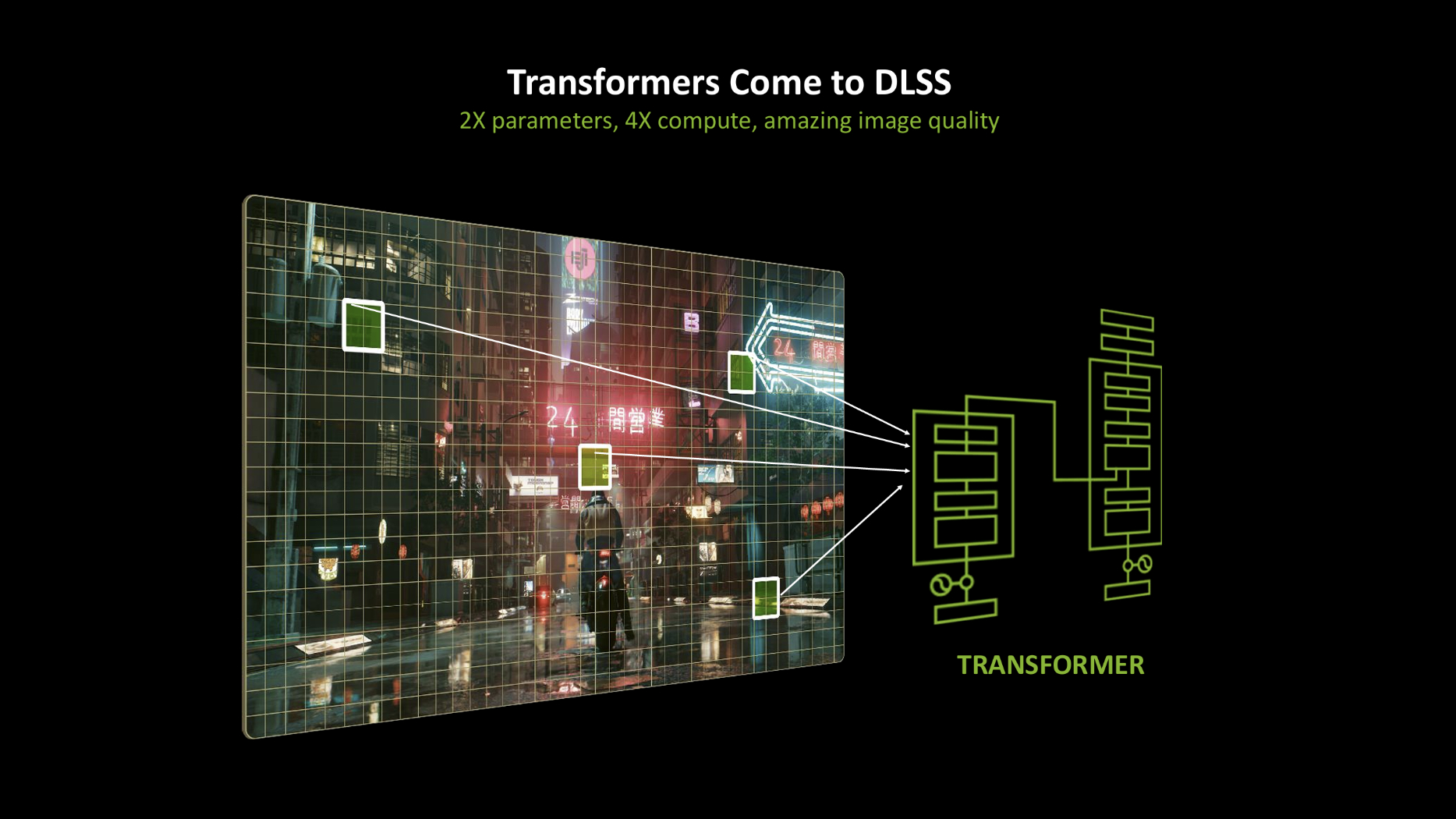

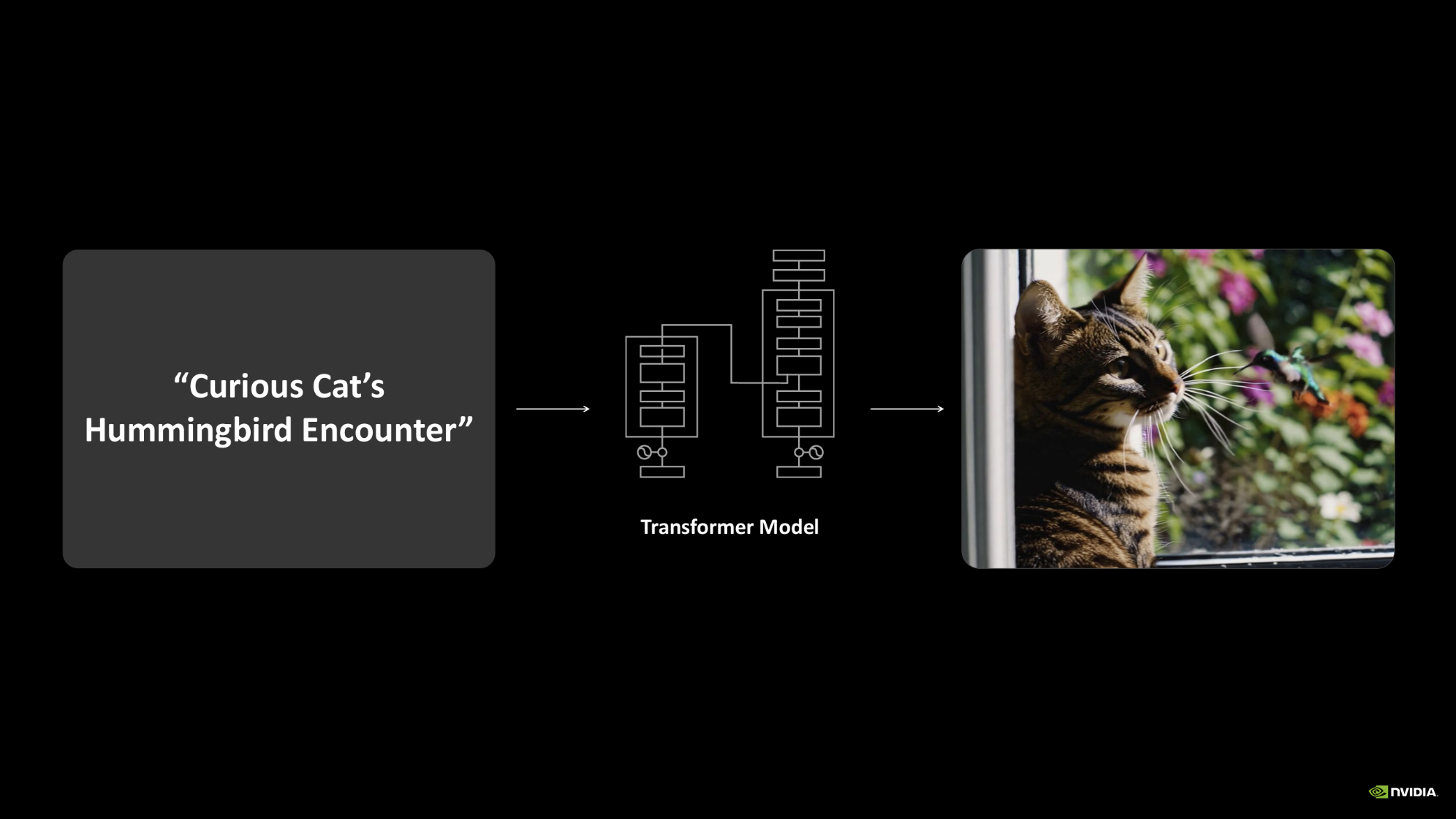

Convolutional neural networks, however, are no longer the cutting edge of artificial intelligence and deep learning. That is now something called the transformer. And this is the big switcheroo that has come out of CES 2025—DLSS will be powered by the transformer model going forward, and will deliver far better image quality, being far more accurate and stable… though that does come with a hit on gaming performance.

The transformer architecture was developed by the smart bods at Google, and is essentially the power behind the latest AI boom as it forms the heart of large language models, such as ChatGPT. In fact, the GPT part there stands for generative pre-trained transformer.

While CNN is more closely related to images, transformers are far more generalised. Their power is about where computational attention is directed.

“The idea behind transformer models,” Catanzaro tells us, “is that attention—how you spend your compute and how you analyse data—should be driven by the data itself. And so the neural network should learn how to direct its attention in order to look at the parts of the data that are most interesting or most useful to make decisions.

“And, when you think about DLSS, you can imagine that there are a lot of opportunities to use attention to make a neural graphics model smarter, because some parts of the image are inherently more challenging.”
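For the curious, the core of that attention idea can be sketched in a few lines of Python. This is the generic scaled dot-product attention from the original transformer work, not Nvidia's DLSS model, and I've made some loud simplifications: the three 'patches' and their two-number feature vectors are made up, and the same vectors are used as queries, keys and values, where a real transformer would first run them through learned projections.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over small lists of vectors."""
    dim = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # How relevant is every other element to this one?
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
                  for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Blend the values according to where the attention landed.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights


# Hypothetical features for three image patches: patches 0 and 2 look
# alike (say, two bits of the same fence), patch 1 is something else.
patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]

# Self-attention with no learned projections (a simplification).
outputs, weights = attention(patches, patches, patches)

for row in weights:
    print([round(w, 3) for w in row])
```

The weights for patch 0 come out higher for itself and its lookalike, patch 2, than for patch 1. Nothing hard-coded that preference; it falls out of the data, which is the 'driven by the data itself' part of Catanzaro's explanation.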

Transformer models are also computationally efficient, and that has allowed Nvidia to increase the size of the models it uses for DLSS 4 because they can be trained on much larger data sets now, and “remember many more examples of things that they saw during training.”

Nvidia has also “dramatically increased the amount of compute that we put into our DLSS model,” says Catanzaro. “Our DLSS 4 models use four times as much compute as our previous DLSS models did.”

And it can make a huge difference in games that support DLSS 4. I think maybe the most obvious impact is in games which use Ray Reconstruction to improve the ray tracing and the denoising of a gameworld. I was hugely impressed with Ray Reconstruction when I first used it, and it still anchors characters in a world far better than previous solutions to ray traced lighting.

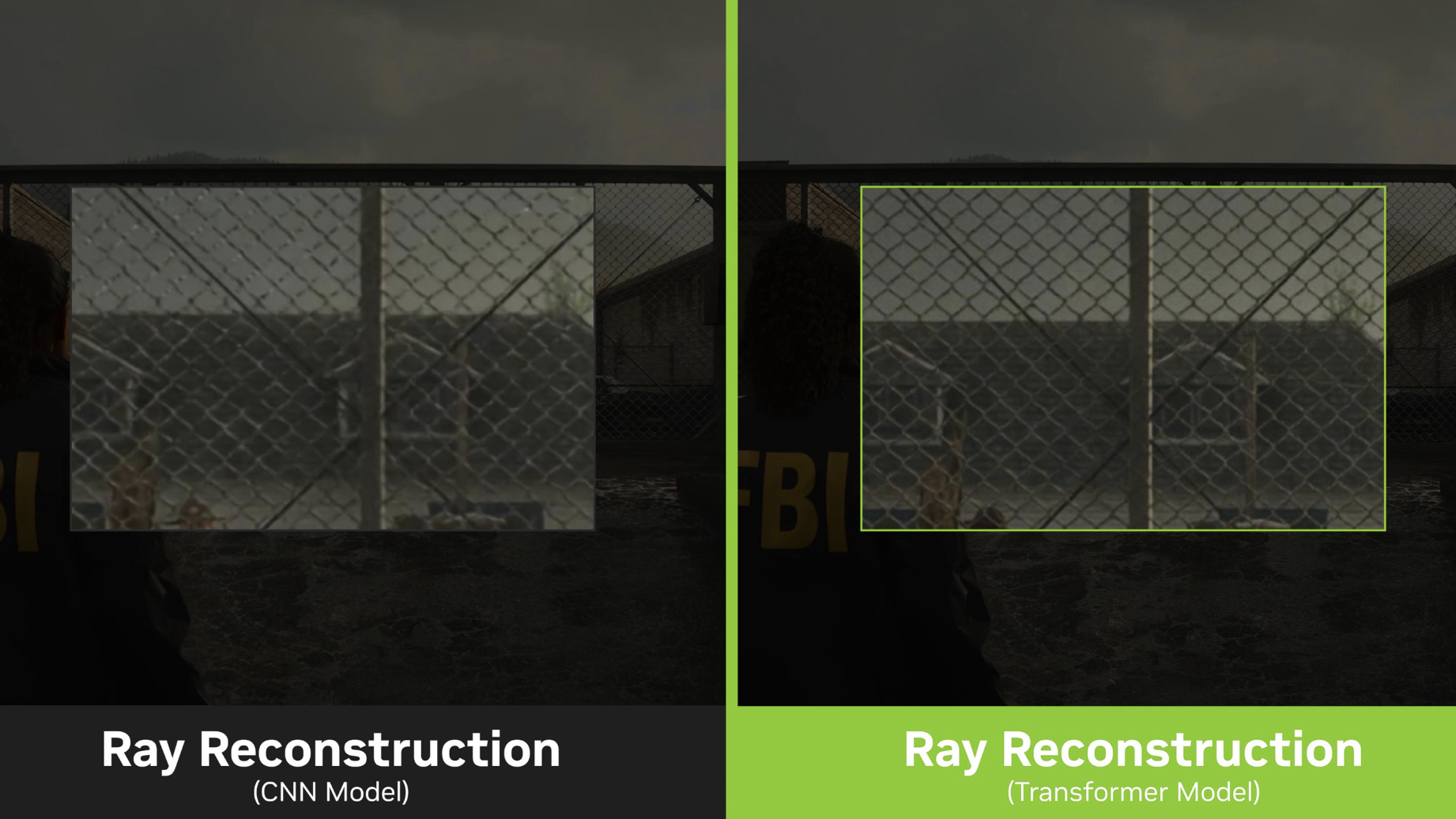

But you do get a noticeable smearing effect sometimes in games such as Cyberpunk 2077 and Alan Wake 2. With the smarts of the transformer model, however, that’s all gone. The improved neural network perceives scene elements more accurately than the CNN model, most especially those trickier scene elements, and most especially in darker environments.

Alan Wake 2 is the epitome of that, with its always brooding level design, and the example we were given on stage highlights a ceiling fan spinning around, leaving the familiar Ray Reconstruction smearing effect in the wake of its fan blades using the CNN model. With DLSS 4 and the transformer model, it just looks like a regular fan, with the dark ceiling behind the spinning fan now given clear detail.

You can also see how much more stable fine details are from this example of a chain-link fence, and you get the same effect with trailing overhead cables, etc.

I’ve also seen it used to great effect in Cyberpunk 2077, and in the RTX Remix version of Half-Life 2—which is still yet to be released but I got to have a play with it last week. I got to check out the demo of HL2 where we could toggle between the two DLSS models at will and the level of detail that suddenly pops out of the darkness with the transformer architecture in play is pretty astounding.

Not in a strange, ‘why is it highlighting that?’ kinda way, but more naturally. The way that even in the flickering gloom outside the ring of light around a flaming torch, set just outside of Ravenholm, you can make out a trail of leaves where before there were just muddy pixels.

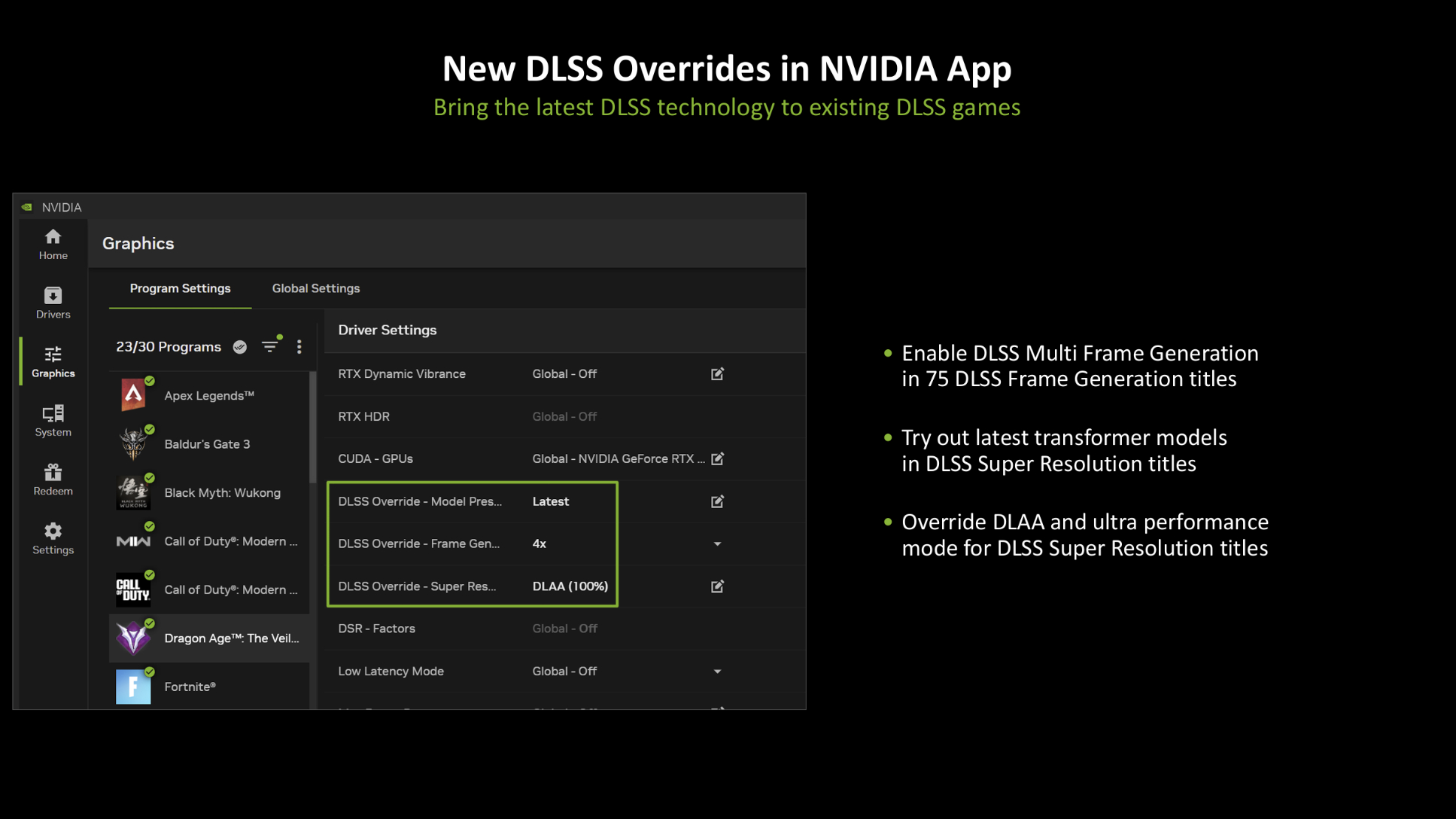

And that extra level of visual fidelity is coming to all games that support DLSS 4, which, thankfully, is every game which currently supports DLSS. There are going to be 75 games and apps at the launch of the RTX 50-series cards that support DLSS 4 out of the box, and they will either give you the option to switch between CNN and transformer models—this is what the build of Cyberpunk 2077 I’ve played with does—or it just switches over wholesale for DLSS 4.

But, via the Nvidia App, you’re also going to be able to override the DLSS version any game currently uses to essentially drop in the new transformer model to take advantage of the improved image quality. You will also be able to add Multi Frame Generation (MFG) into any game that currently supports Nvidia’s existing Frame Generation feature via this method, too. So long as you have an RTX Blackwell card, anyways…

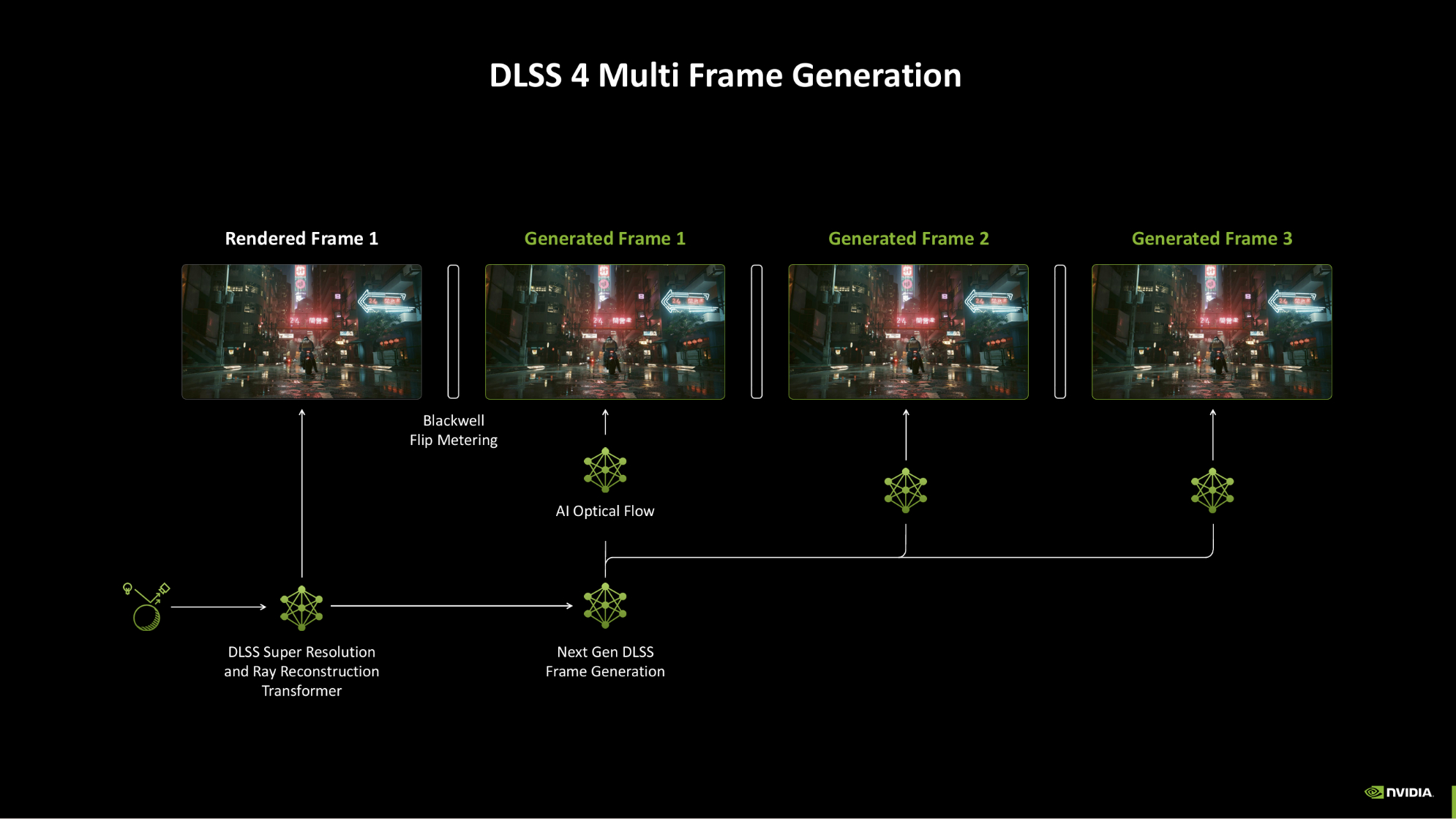

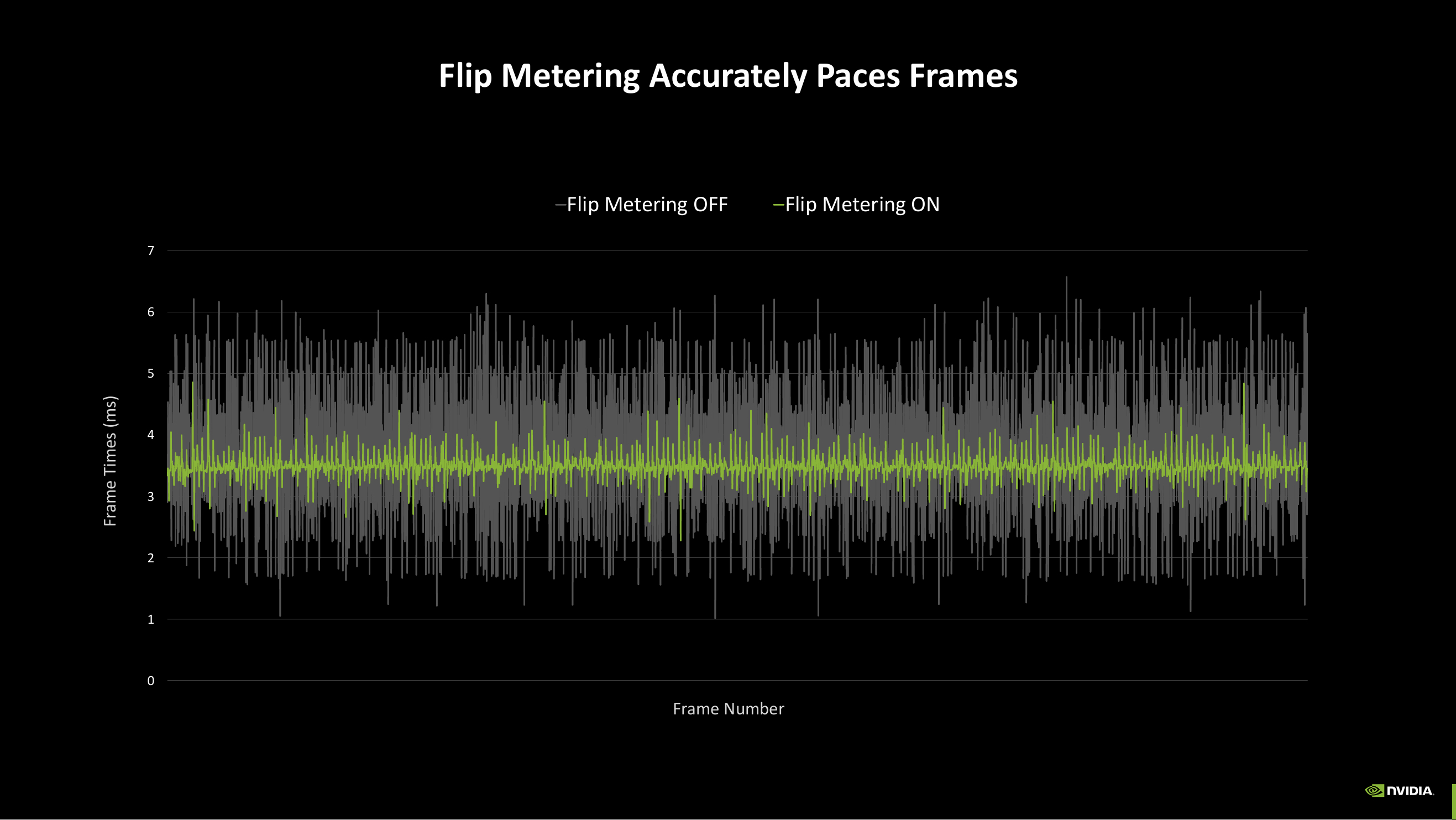

It is worth noting, however, that even though MFG is hardware-locked to Blackwell, the standard two-times Frame Generation benefits from an enhanced model, too. Nvidia says its new AI model is 40% faster and uses 30% less VRAM. It also no longer uses the optical flow hardware baked into the Ada architecture, as it’s been replaced by a more efficient AI model to do the same job. RTX 40-series cards can also now take advantage of the Flip Metering frame pacing tech—the same thing which locks MFG to RTX Blackwell—just without enhanced hardware in the display engine.

DLSS 4’s transformer model for Ray Reconstruction and Super Resolution isn’t restricted to RTX 50-series or RTX 40-series cards, however, and is available to all RTX GPUs from Turing upwards. Which is a hell of a sell.

“One of the reasons this is possible,” says Catanzaro, “is because the way that we built DLSS 4 was to be as compatible as possible with DLSS 3. So, games that have integrated DLSS 3 and DLSS 3.5 can just fit right in.

“DLSS 4 has something for all RTX gamers.”

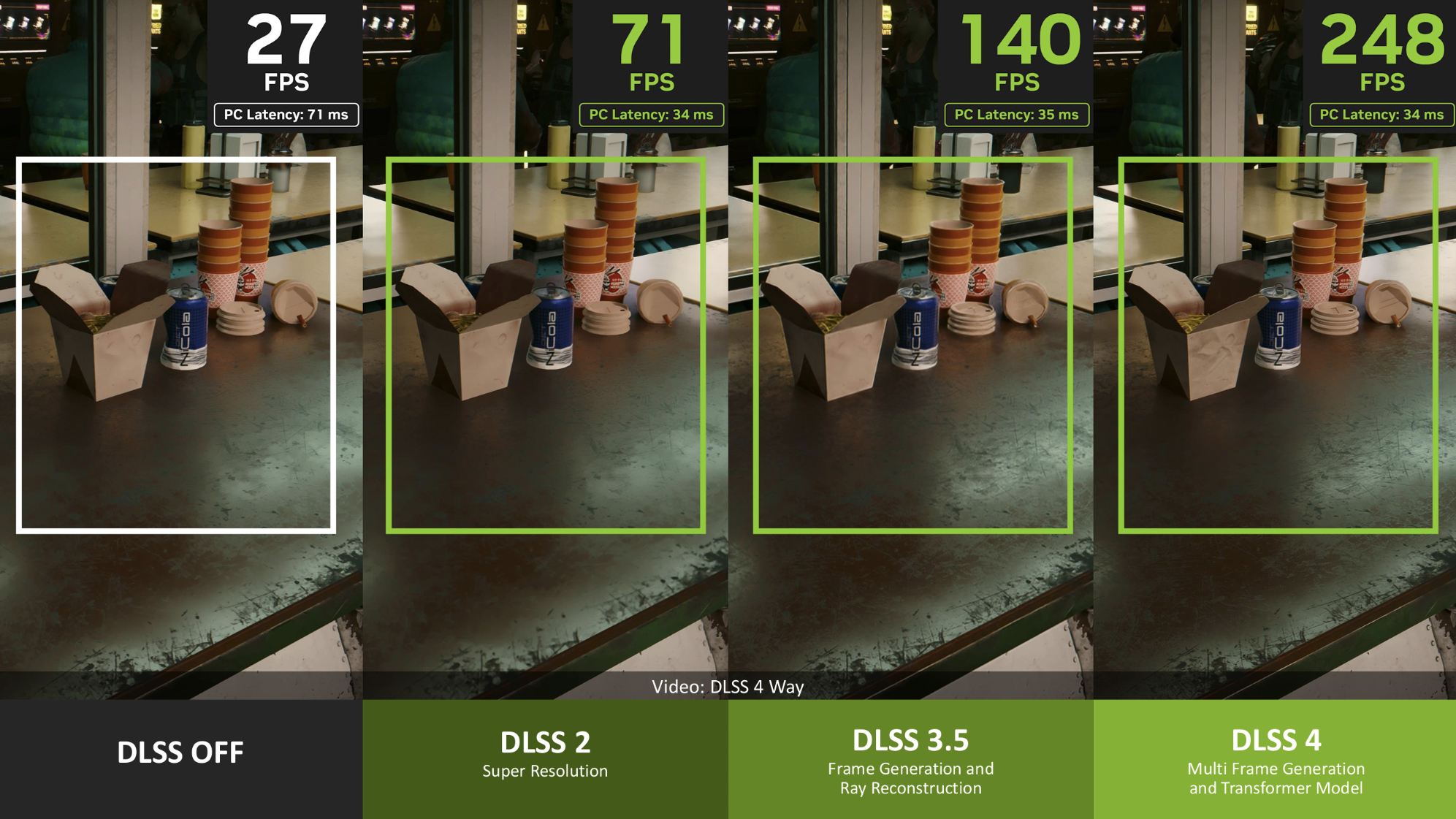

But there always has to be some sort of caveat. While it is supported by all RTX GPUs, the transformer model is more computationally intensive than the previous CNN incarnation. The Nvidia folk I spoke to at the show estimated it to be potentially around 10% more expensive in terms of frame rates. So, if you just look at the raw frame rate data you will see DLSS 4 performing worse at the same DLSS levels compared with previous iterations.

What you won’t see there is the extra visual fidelity.

You could potentially offset that performance hit, however, by leaning on that extra fidelity to drop down a DLSS tier, from Quality to Balanced, for example, and that might well give you better performance and maybe even slightly improved visuals. But we will have to check that out for ourselves when DLSS 4 launches out of beta in full.
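As a rough back-of-the-envelope for that trade-off, here's a quick Python sketch. Big caveats: the per-axis render scales below (Quality at two-thirds, Balanced at 0.58) are the commonly quoted DLSS defaults, not figures from Nvidia's briefing; the 100 fps baseline is a made-up number; and treating the estimated 10% cost as a straight 10% off the frame rate is my own simplification. Fewer rendered pixels also doesn't translate one-to-one into frame rate, so treat this as arithmetic, not a benchmark.

```python
# Assumed per-axis render scales (commonly quoted defaults, not from the article).
SCALE = {"Quality": 2 / 3, "Balanced": 0.58}


def render_resolution(out_w, out_h, mode):
    """Internal resolution the GPU renders at before DLSS upscales it."""
    s = SCALE[mode]
    return round(out_w * s), round(out_h * s)


def pixel_count(resolution):
    width, height = resolution
    return width * height


def fps_after_cost(baseline_fps, cost=0.10):
    """Apply the estimated ~10% transformer cost as a flat frame rate reduction."""
    return baseline_fps * (1 - cost)


# 4K output as the example target.
quality = render_resolution(3840, 2160, "Quality")
balanced = render_resolution(3840, 2160, "Balanced")

# Share of rendered pixels saved by dropping from Quality to Balanced.
saving = 1 - pixel_count(balanced) / pixel_count(quality)

print("Quality renders at", quality)
print("Balanced renders at", balanced)
print(f"Balanced renders {saving:.0%} fewer pixels than Quality")
print("Hypothetical 100 fps with the ~10% cost applied:", fps_after_cost(100.0))
```

On those assumptions, Balanced shades roughly a quarter fewer pixels than Quality at 4K, which is why dropping a tier looks like a plausible way to claw back a hit of around 10%. Whether the image holds up is the bit that needs real testing.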

I for one am almost looking forward more to the DLSS 4 launch than I am to getting my hands on the svelte new RTX 5090. Though I will say, its Multi Frame Generation does have to be seen to be believed.