AMD has finally taken the wraps off of its long-awaited RDNA 4 graphics cards, the RX 9070 and RX 9070 XT. And, to be honest, it feels like a long time coming. Details have been thin on the ground after a somewhat chaotic half-reveal during CES 2025, but now we’ve finally received hard details as to what these cards are made of—and a closer look at the shiny new architecture inside.

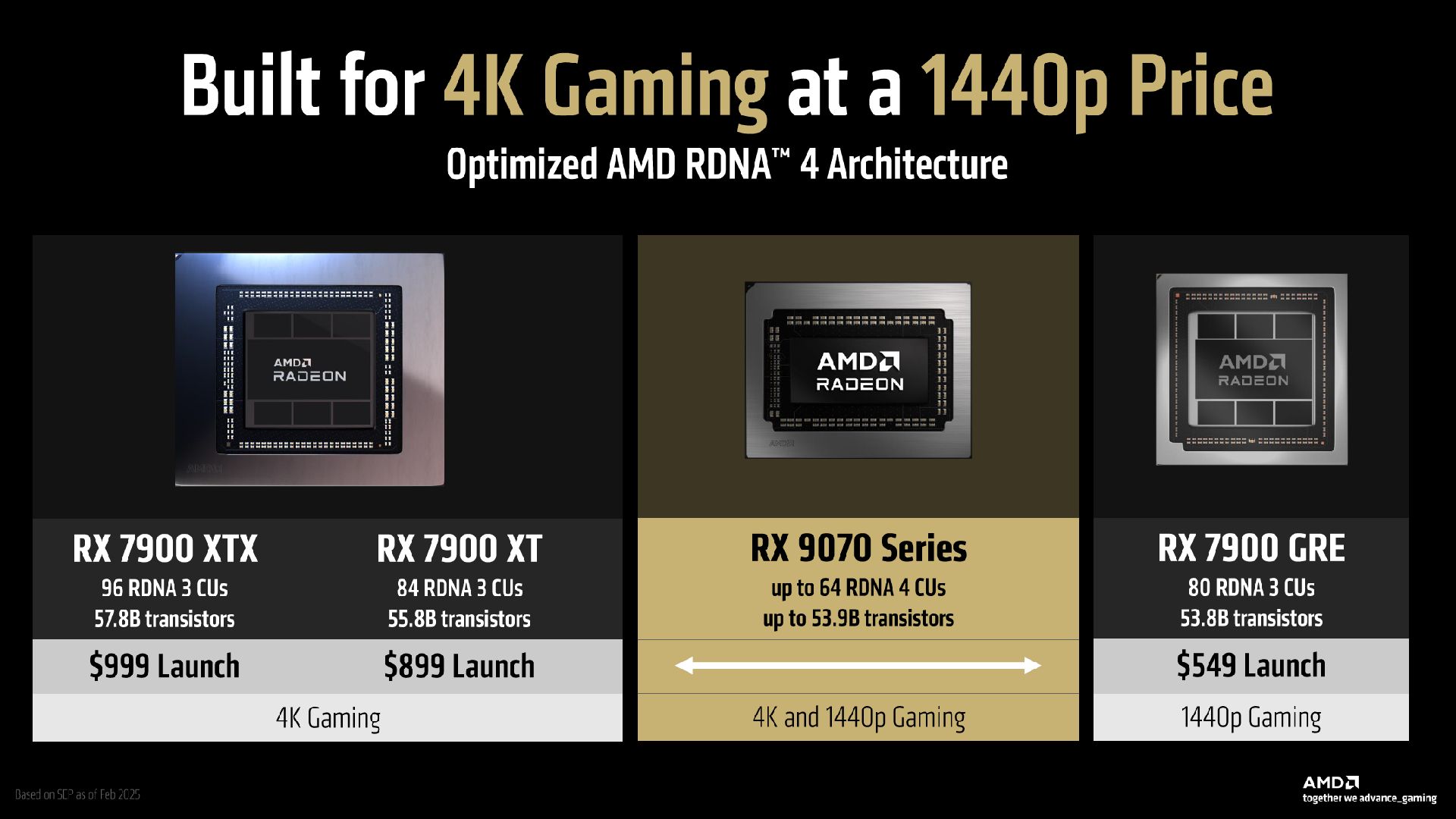

Well, sort of shiny and new, at least. It’s probably easier to think of RDNA 4 as an evolution of RDNA 3 rather than a quantum leap forward in AMD’s GPU architecture, and that’s perhaps not a bad thing. AMD has once again confirmed a March 6 launch, and has now revealed pricing: $549 for the RX 9070 and $599 for the RX 9070 XT.

While AMD has been keen to point out that these cards are not designed to compete at the upper end of the market, the pricing here is actually surprisingly competitive for modern mid-range cards, particularly if they’re as performant as AMD says. Anyway, on with the show.

RDNA 4 architecture

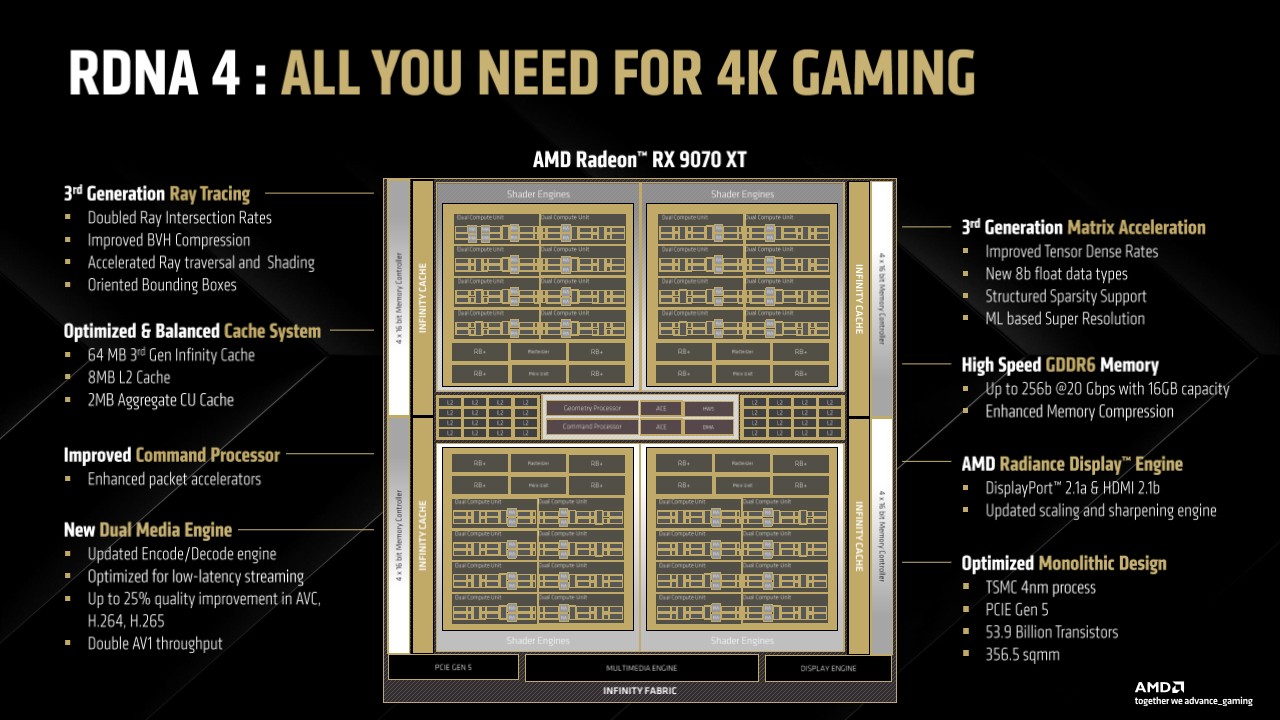

Both the RX 9070 and RX 9070 XT are built on TSMC’s 4nm process node, resulting in a 357 mm² die roughly the same size as the version used in the RX 7800 XT, but with almost double the transistors: 53.9 billion in total. However, even with the massively increased transistor count, there’s nothing that looks particularly out of the ordinary in the overall architectural view of the die itself—but it’s when you dive into the details of the Compute Units that things become interesting.

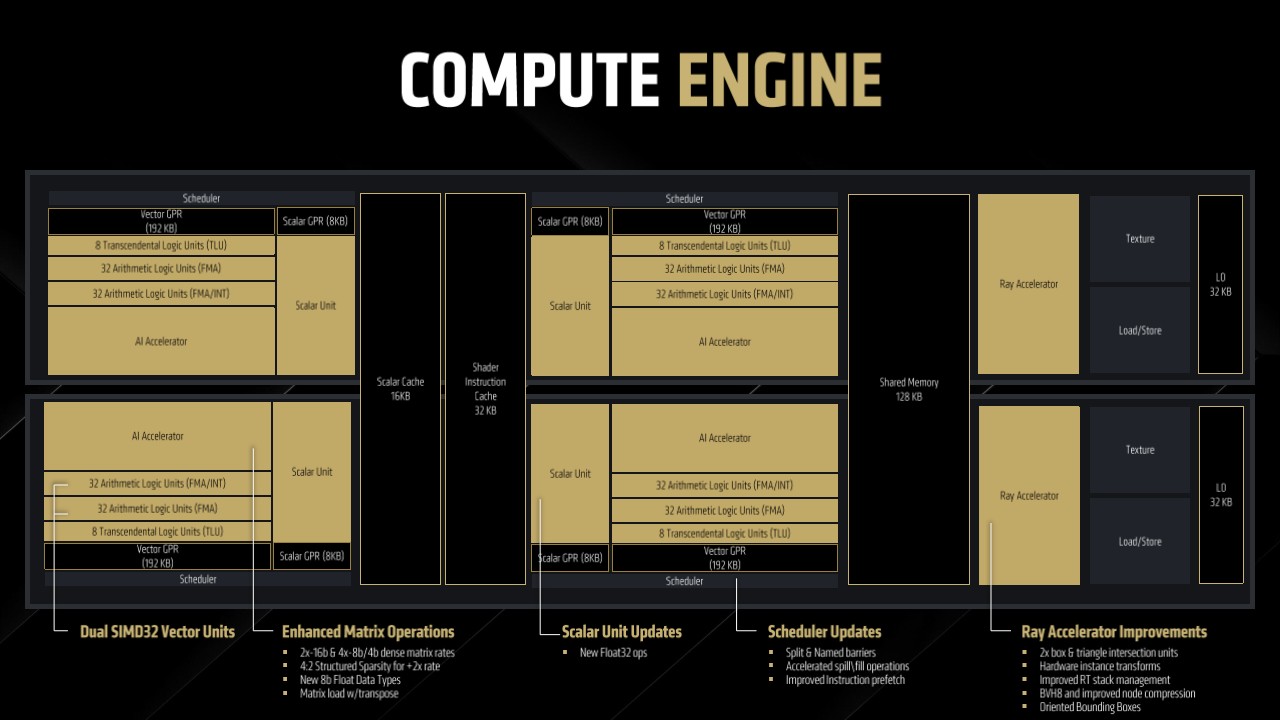

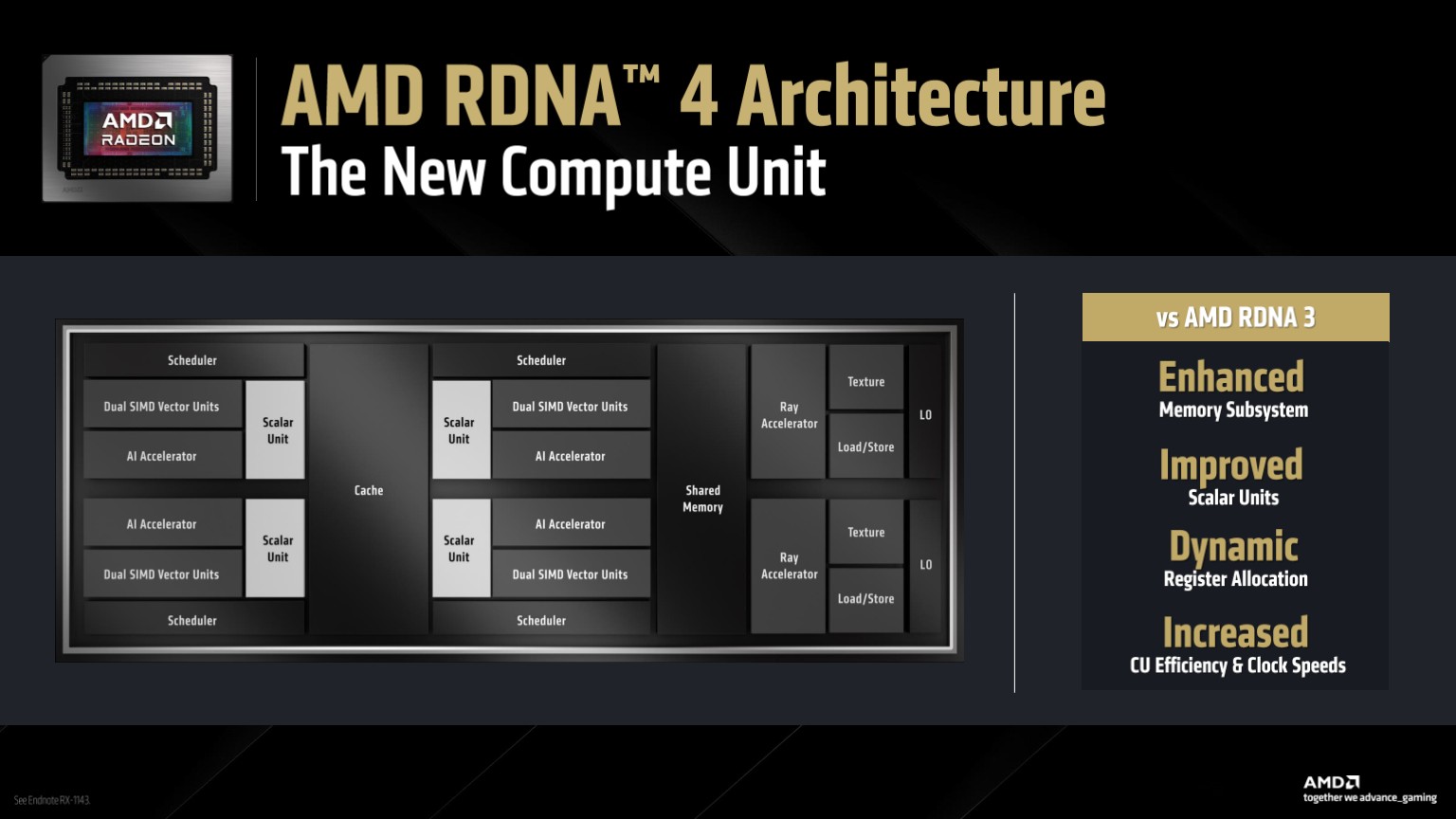

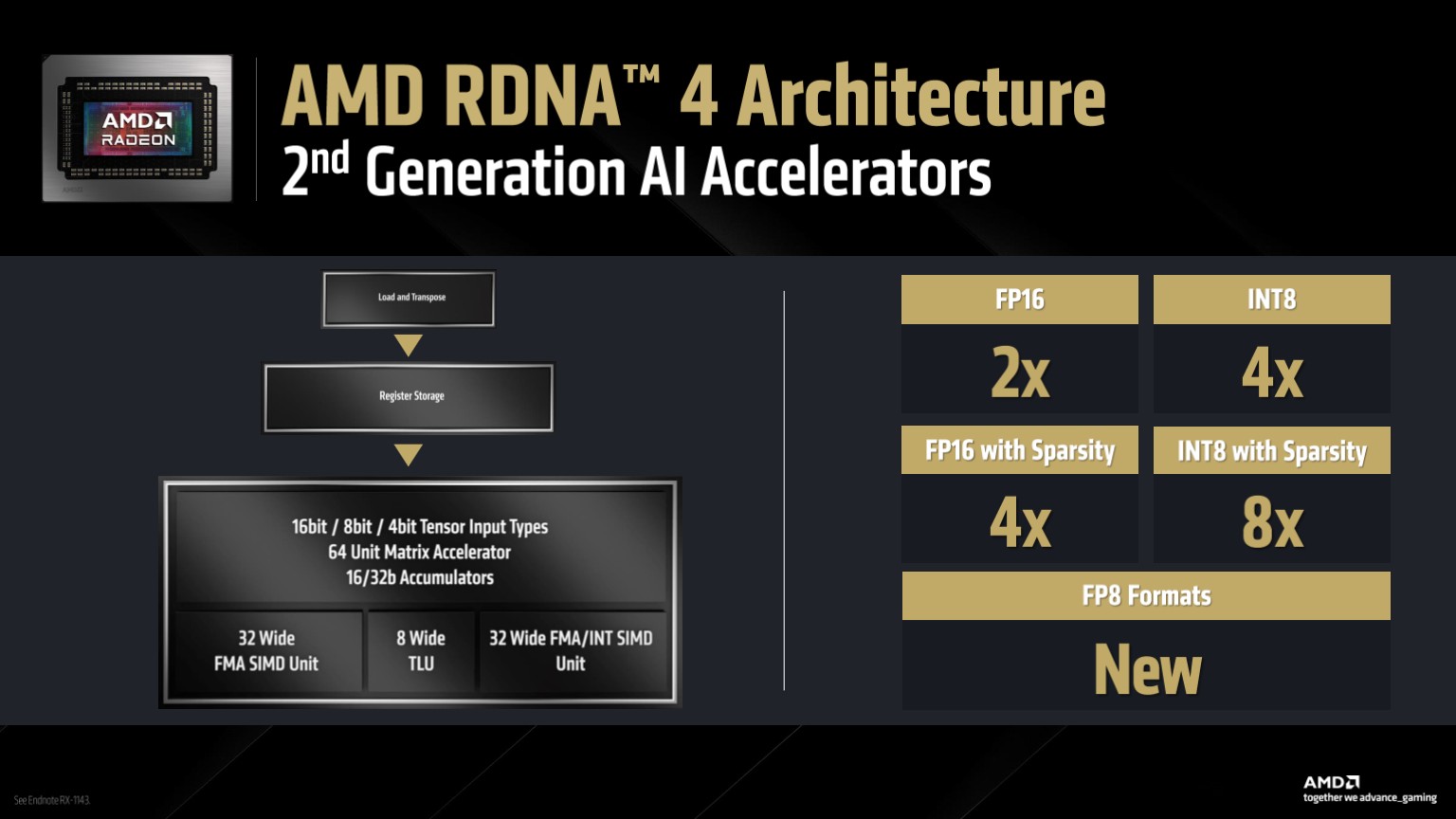

RDNA 4’s CUs initially look much the same as the RDNA 3 versions, with a few key efficiencies thrown into the pot. However, sitting next to each batch of ALUs and TLUs is now a second-generation AI accelerator with support for FP8 calculations and enhanced matrix operations. There’s also a faster memory subsystem, improved scalar units and dynamic register allocation, all of which AMD says leads to increased efficiency per CU and much higher clock speeds compared to RDNA 3.

RDNA 4 also supports the reordering of shader instructions requesting memory, which should keep those new CUs busy.

Keep an eye on those AI accelerators. They’ll come into play later for the latest version of AMD’s upscaler, FSR 4, but I’m getting ahead of myself. Back to the down and dirty stuff.

The CUs are still paired into Dual Compute Units, as per RDNA 3. The RX 9070 XT gets eight per shader engine, with a grand total of 256 ALUs, 32 TLUs, four AI accelerators and 16 KB scalar cache across them, alongside 32 KB of shader instruction cache and 128 KB of shared memory per compute engine. Sitting at the tail end of our pretty little CU stack we get two ray accelerators apiece, and it’s the changes here that AMD seems particularly proud of.

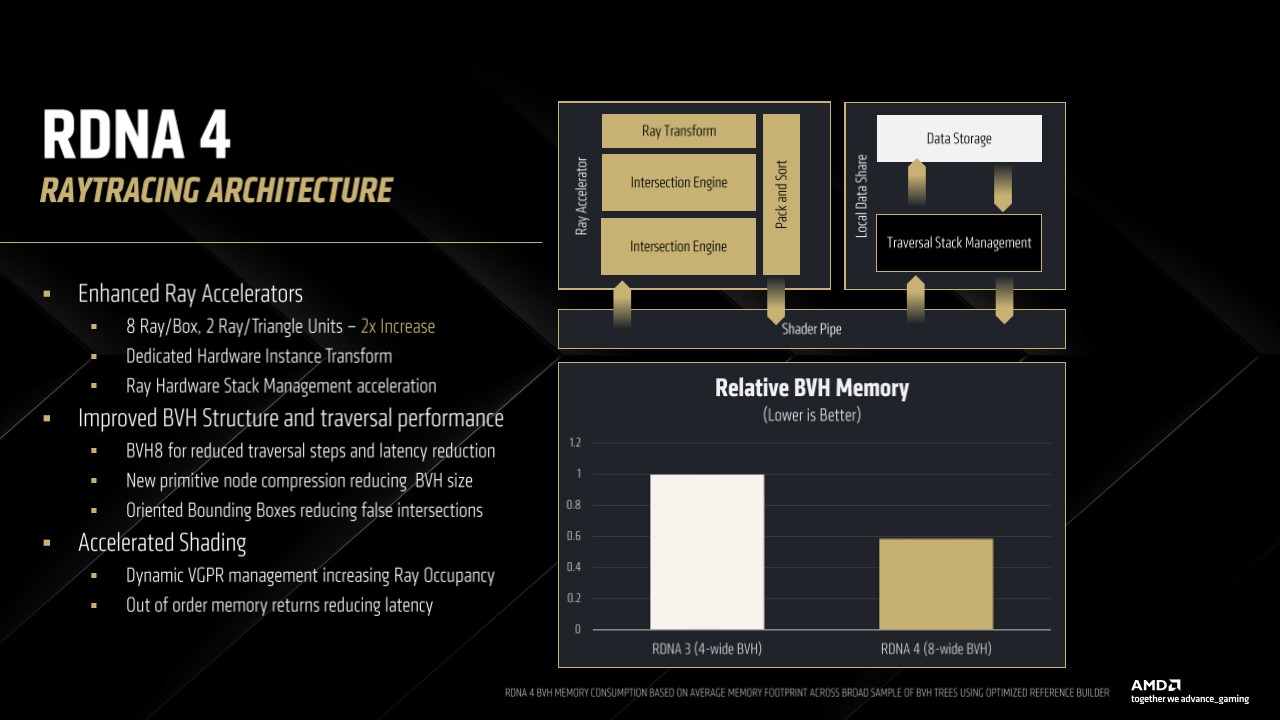

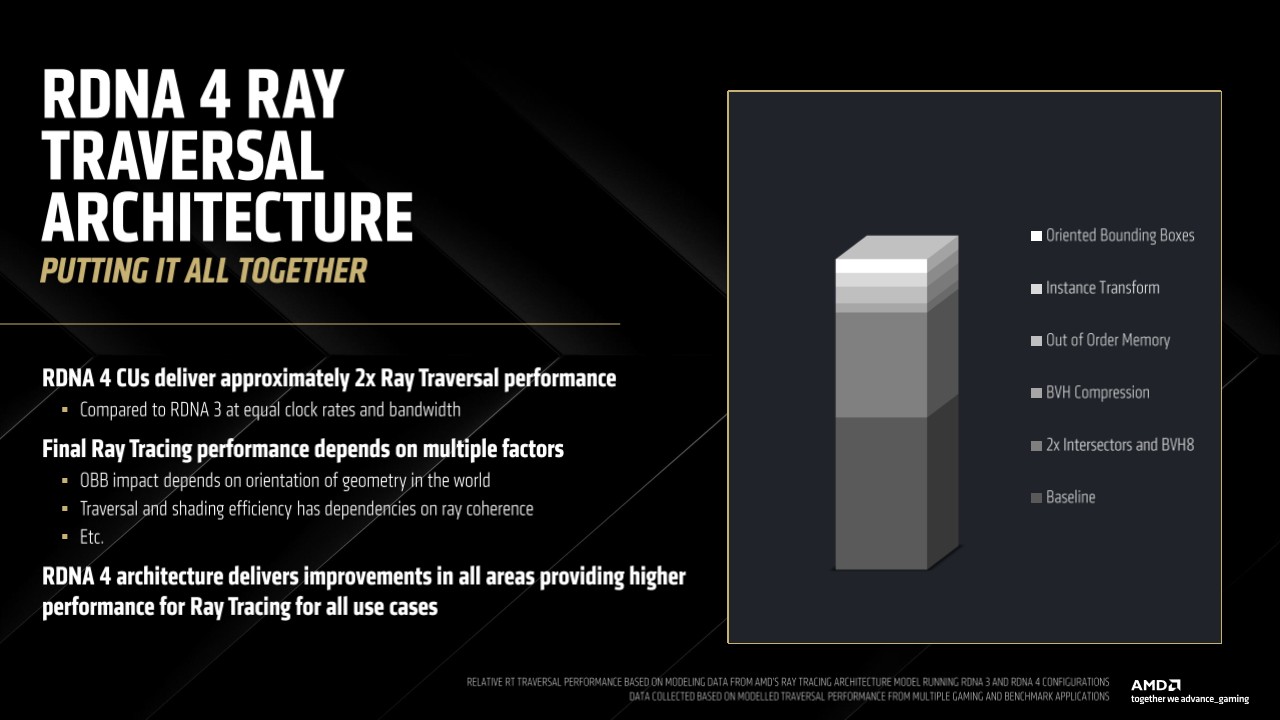

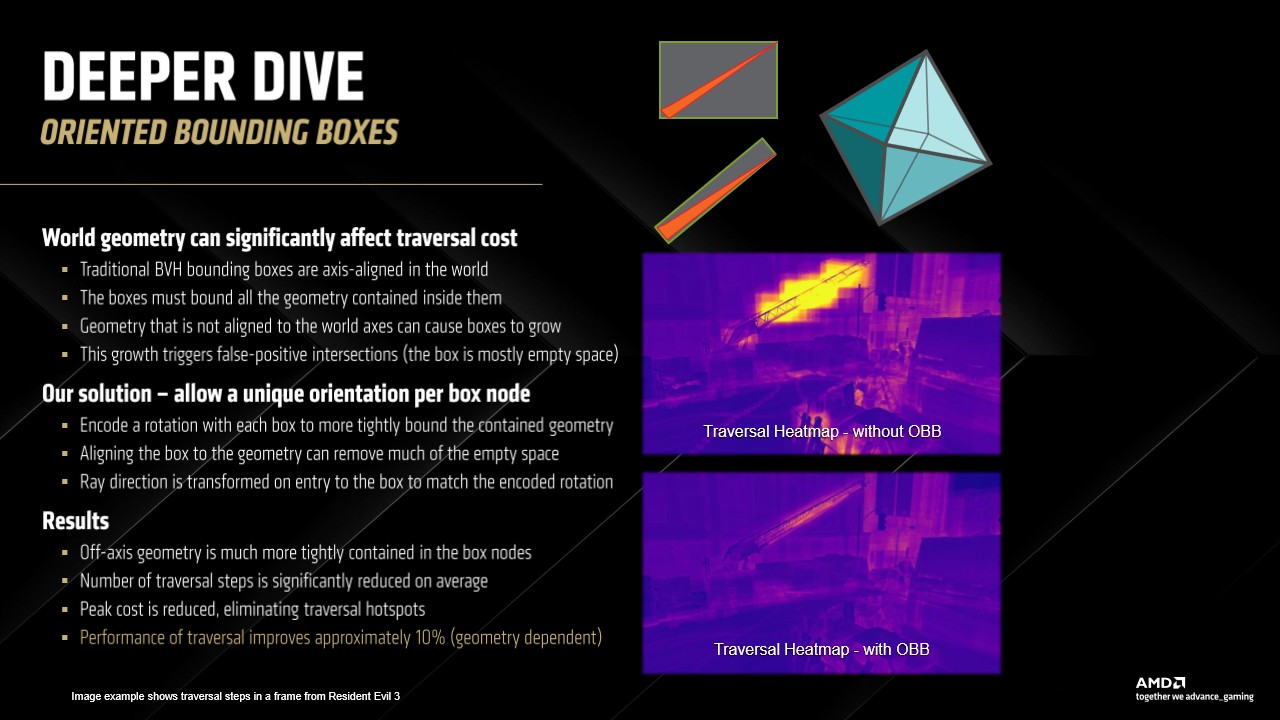

RDNA 3 cards were not known for their ray tracing performance, and AMD looks to have zeroed in on this deficit to bring a claimed 2x ray traversal performance uplift to RDNA 4. The third-generation ray tracing accelerators use “Oriented Bounding Boxes” to reduce the size and complexity of Bounding Volume Hierarchy data, which AMD says delivers much more efficient ray traversal through geometry with a lower memory cost, making better use of the VRAM in the process.
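To get a feel for why an oriented box might help, here is a minimal 2D sketch. It is purely illustrative and says nothing about how AMD's hardware actually builds or stores its BVH; the plank dimensions and the function names are made up for the example. It compares the area of a tight, rotated box around a long thin object with the axis-aligned box needed to enclose the same object.

```python
import math


def aabb_area_of_rotated_rect(width, height, angle_degrees):
    """Area of the axis-aligned box that fully encloses a rotated rectangle."""
    theta = math.radians(angle_degrees)
    c, s = abs(math.cos(theta)), abs(math.sin(theta))
    aabb_width = width * c + height * s
    aabb_height = width * s + height * c
    return aabb_width * aabb_height


def obb_area(width, height):
    """Area of a box aligned with the object itself (rotation does not matter)."""
    return width * height


# Hypothetical object: a 10 x 1 plank lying at 45 degrees to the axes.
plank = (10.0, 1.0)
tight = obb_area(*plank)
loose = aabb_area_of_rotated_rect(*plank, 45.0)

print(f"OBB area:  {tight:.1f}")
print(f"AABB area: {loose:.1f}")
print(f"AABB is {loose / tight:.2f}x larger")
```

In this toy case the axis-aligned box is about six times larger than the oriented one, and all of that extra space is empty. A ray passing through the empty part still triggers further intersection tests, which is the sort of wasted work a tighter bounding volume is meant to avoid.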

The new RT accelerators now have a second intersection engine, which AMD says doubles the performance for both Ray/Box and Ray/Triangle testing, alongside a dedicated ray transform block, which is also said to increase the performance as rays are traversed.

While AMD is still performing the traversal of the BVH data via the CUs rather than a dedicated ASIC, each RT accelerator is beefier than the previous versions, which hopefully translates into ray tracing performance that stands a chance of competing with Nvidia’s efforts.

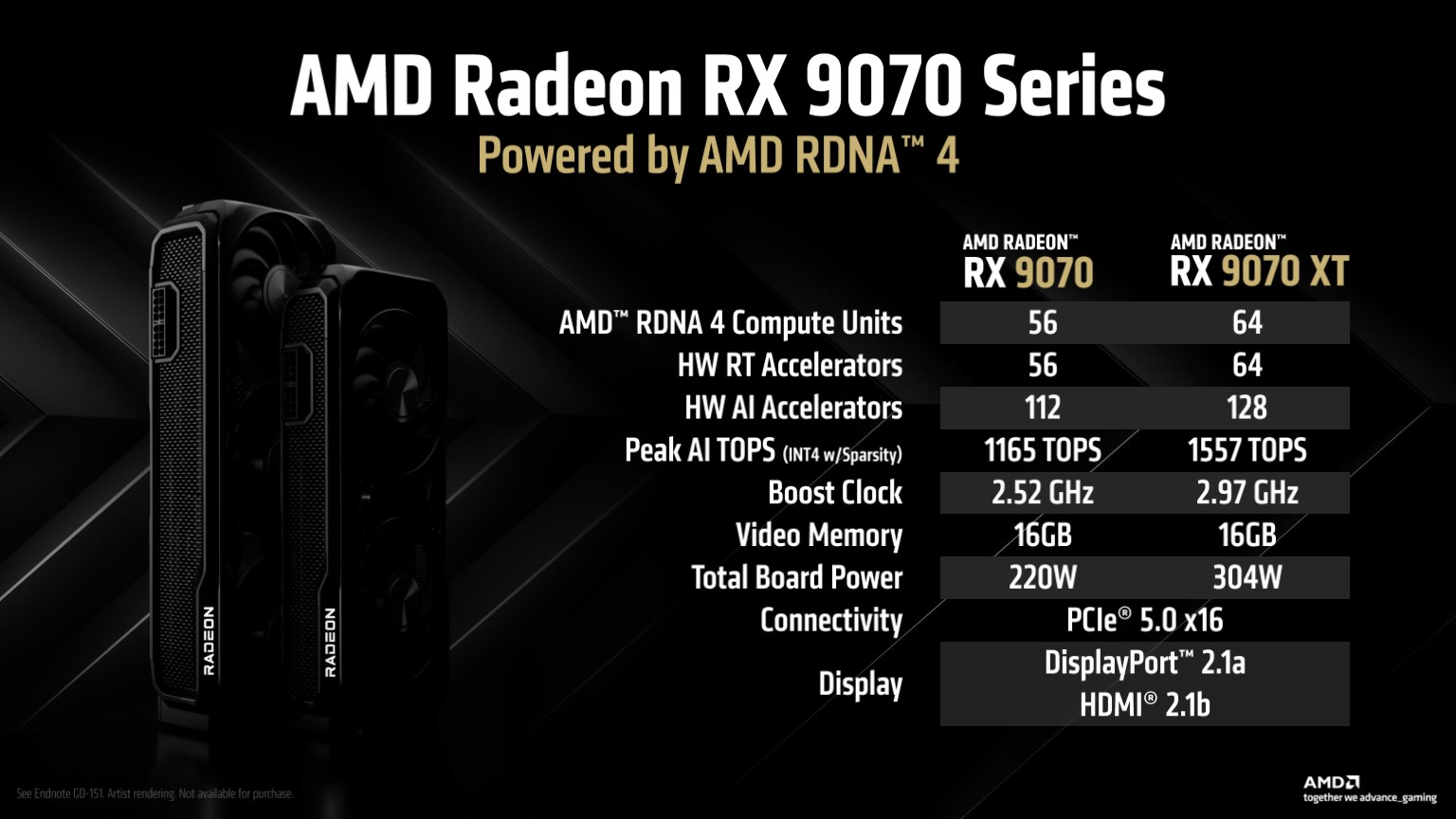

As a result of all this CU reinforcement, the new GPUs are claimed to deliver performance figures comparable to the high-end RX 7900-series cards, despite having a lower total CU count overall. The RX 9070 XT has 64 refreshed CUs total and the RX 9070 makes do with 56—compared to the 84 compute units you’d find in the RX 7900 XT, for example.

That means the RX 9070 XT ends up with 64 ray accelerators, 128 AI accelerators and 4096 stream processors, compared to the 56 ray accelerators, 112 AI accelerators and 3584 stream processors of the standard RX 9070. Clock speeds are also much lower for the RX 9070 compared to its bigger brother, with the standard card hitting a boost clock of up to 2,520 MHz compared to the RX 9070 XT’s 2,970 MHz top whack.
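Those totals all fall out of the CU count. As a quick sanity check, the sketch below assumes per-CU ratios inferred from the figures above (64 stream processors, two AI accelerators and one ray accelerator per CU) rather than taken from an AMD spec sheet, and the function name is just for illustration.

```python
# Per-CU ratios inferred from the article's totals (an assumption, not an AMD spec).
STREAM_PROCESSORS_PER_CU = 64
AI_ACCELERATORS_PER_CU = 2
RAY_ACCELERATORS_PER_CU = 1


def unit_counts(compute_units):
    """Derive headline unit totals from a card's compute unit count."""
    return {
        "stream_processors": compute_units * STREAM_PROCESSORS_PER_CU,
        "ai_accelerators": compute_units * AI_ACCELERATORS_PER_CU,
        "ray_accelerators": compute_units * RAY_ACCELERATORS_PER_CU,
    }


for name, cus in (("RX 9070 XT", 64), ("RX 9070", 56)):
    print(name, unit_counts(cus))
```

Running it for 64 and 56 CUs reproduces the stream processor, AI accelerator and ray accelerator counts quoted for the two cards.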

Specifications

Part of me thinks the 70 MHz on the end of the XT’s boost clock is purely for branding reasons (it is the RX 9070-series, after all), but I’d be curious to see what sort of overclocking potential is left on the table here. Nvidia’s RTX 50-series GPUs have been reliable overclockers so far, and given all the moves towards CU efficiency with RDNA 4, part of me wonders whether there might be more left to give in the AMD cards, too. We’ll find out in due course, I guess.

Looking at the architecture overall, it’s otherwise a reasonably similar picture to what you’d find in RDNA 3. AMD says it has “optimised and balanced” the cache system, with 64 MB of third-gen Infinity Cache, 8 MB of L2 cache (a 2 MB improvement over the 6 MB in RDNA 3) and 2 MB of aggregate CU cache on tap, governed by an improved command processor.

Both of the new cards will make use of 16 GB of 20 Gbps GDDR6 VRAM apiece, over a 256-bit bus with an effective memory bandwidth of 640 GB/s. They also feature an enhanced media engine for improved encoding quality, supporting up to 8K/60 fps streaming and recording via AV1.
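The 640 GB/s figure follows directly from the other two numbers. A minimal sketch of the arithmetic, using the usual rule of thumb of per-pin data rate multiplied by bus width and divided by eight bits per byte (the function name is just for illustration):

```python
def memory_bandwidth_gb_s(data_rate_gbps, bus_width_bits):
    """Peak memory bandwidth in GB/s from per-pin data rate and bus width."""
    bits_per_byte = 8
    return data_rate_gbps * bus_width_bits / bits_per_byte


# 20 Gbps GDDR6 on a 256-bit bus, as quoted for both RX 9070 cards.
print(memory_bandwidth_gb_s(20, 256), "GB/s")
```

This is a theoretical peak, so real-world throughput will be lower, and it doesn't account for whatever the Infinity Cache saves in trips out to VRAM.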

When it comes to power usage, AMD says the RX 9070 XT has a TBP of 304 W with a recommended PSU wattage of 750 W, while the RX 9070 has a mere 220 W TBP with a 650 W power supply recommendation. Those are some impressively low power figures, particularly given the performance claims.

Performance

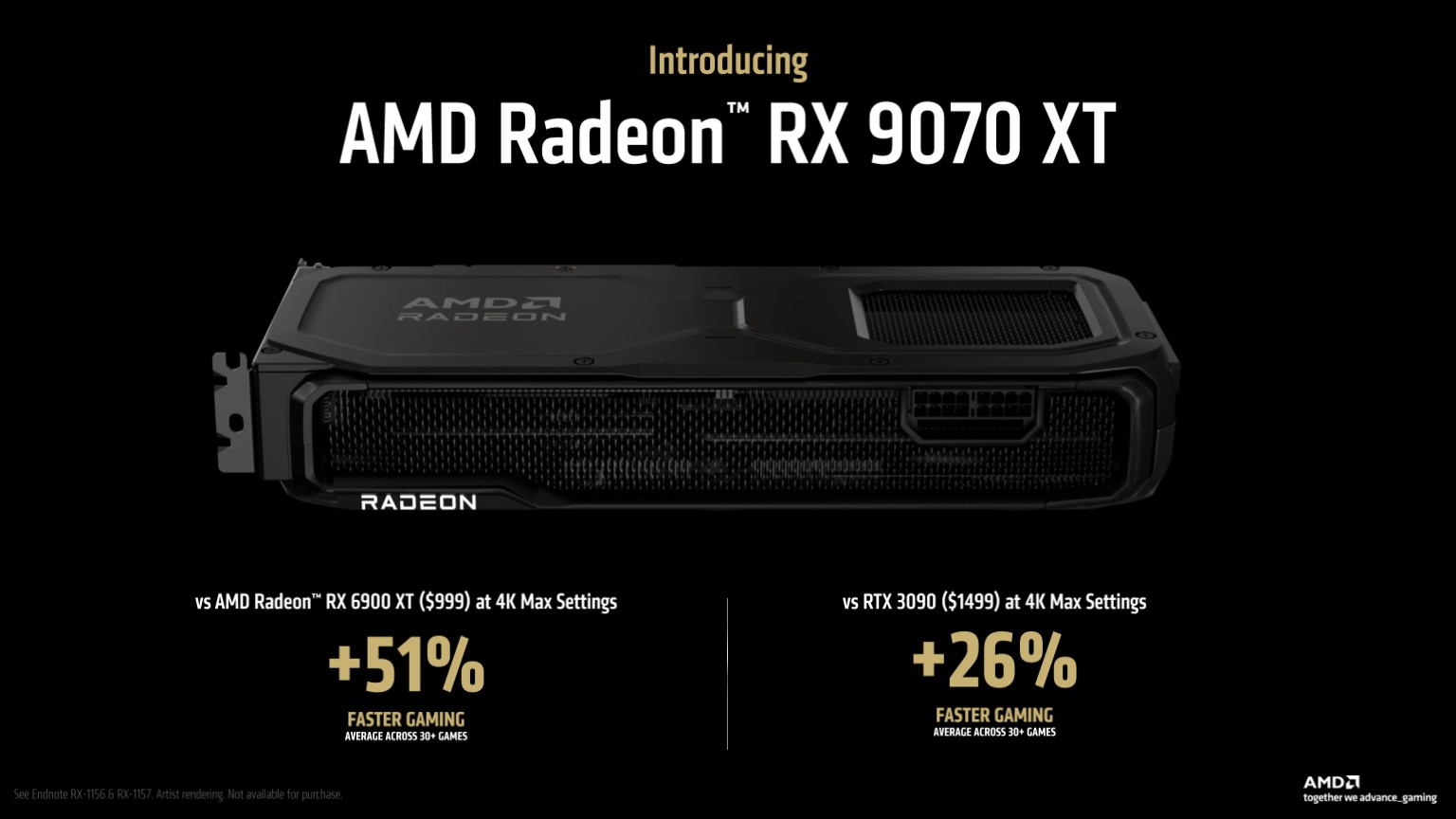

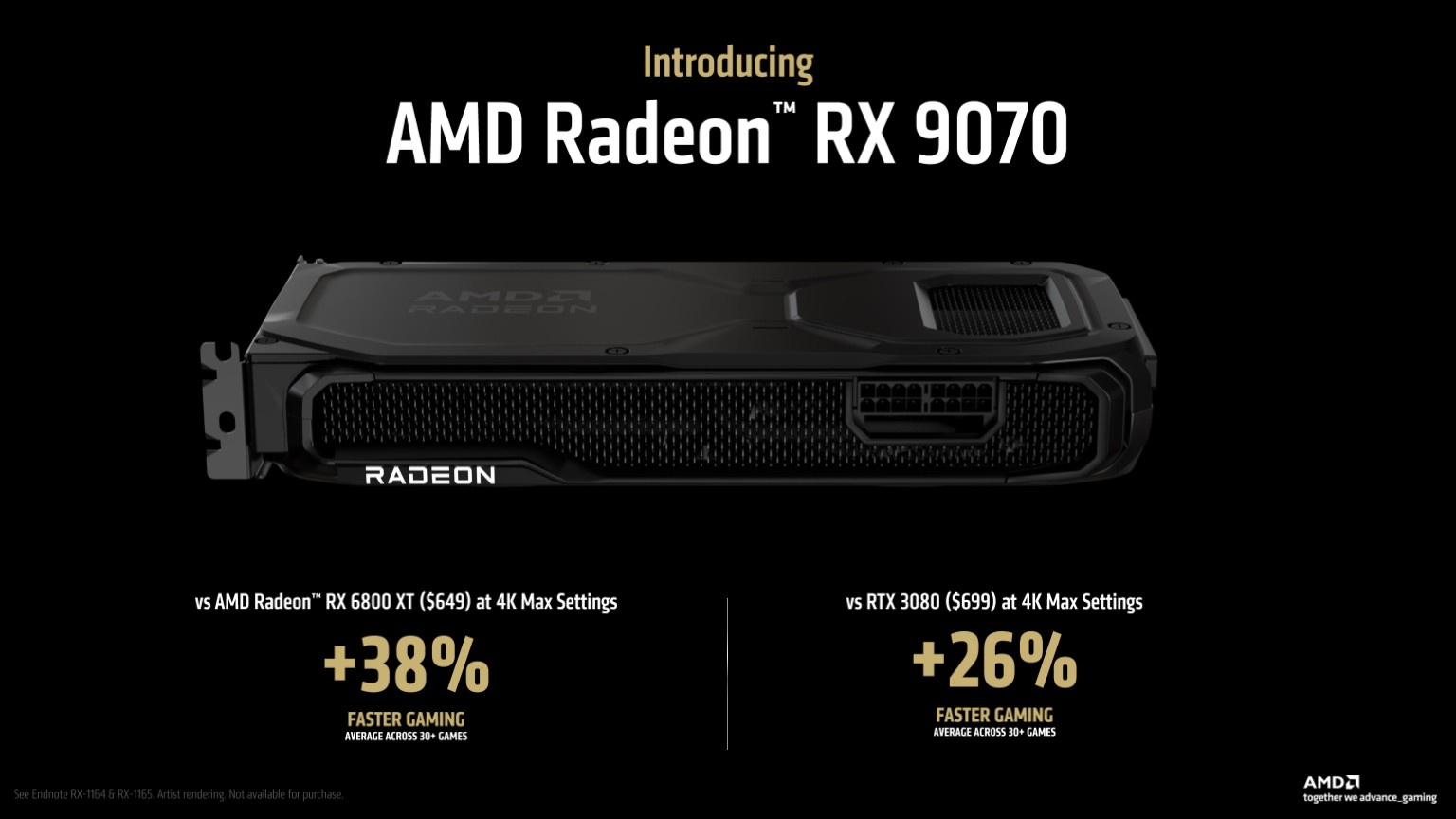

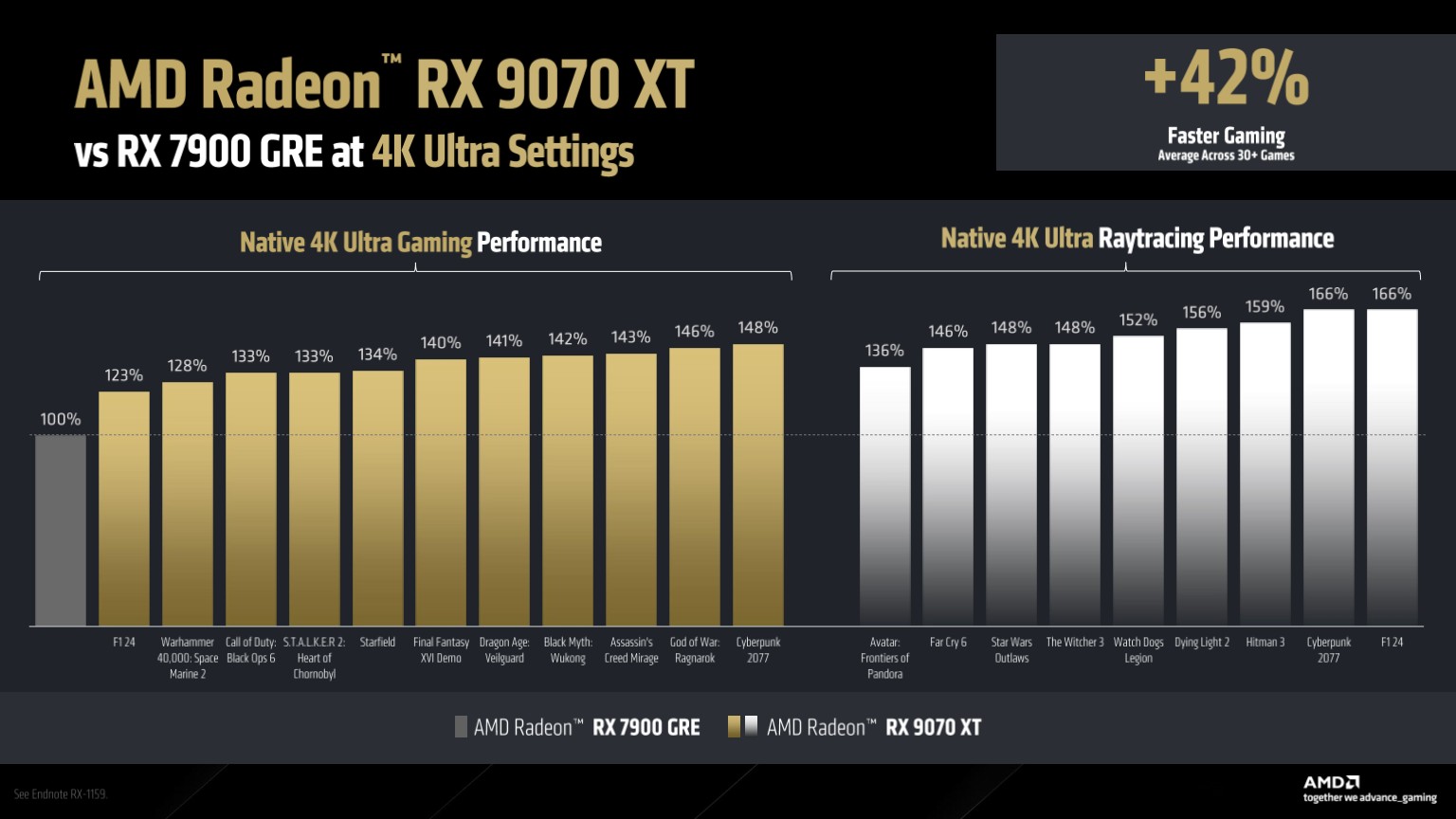

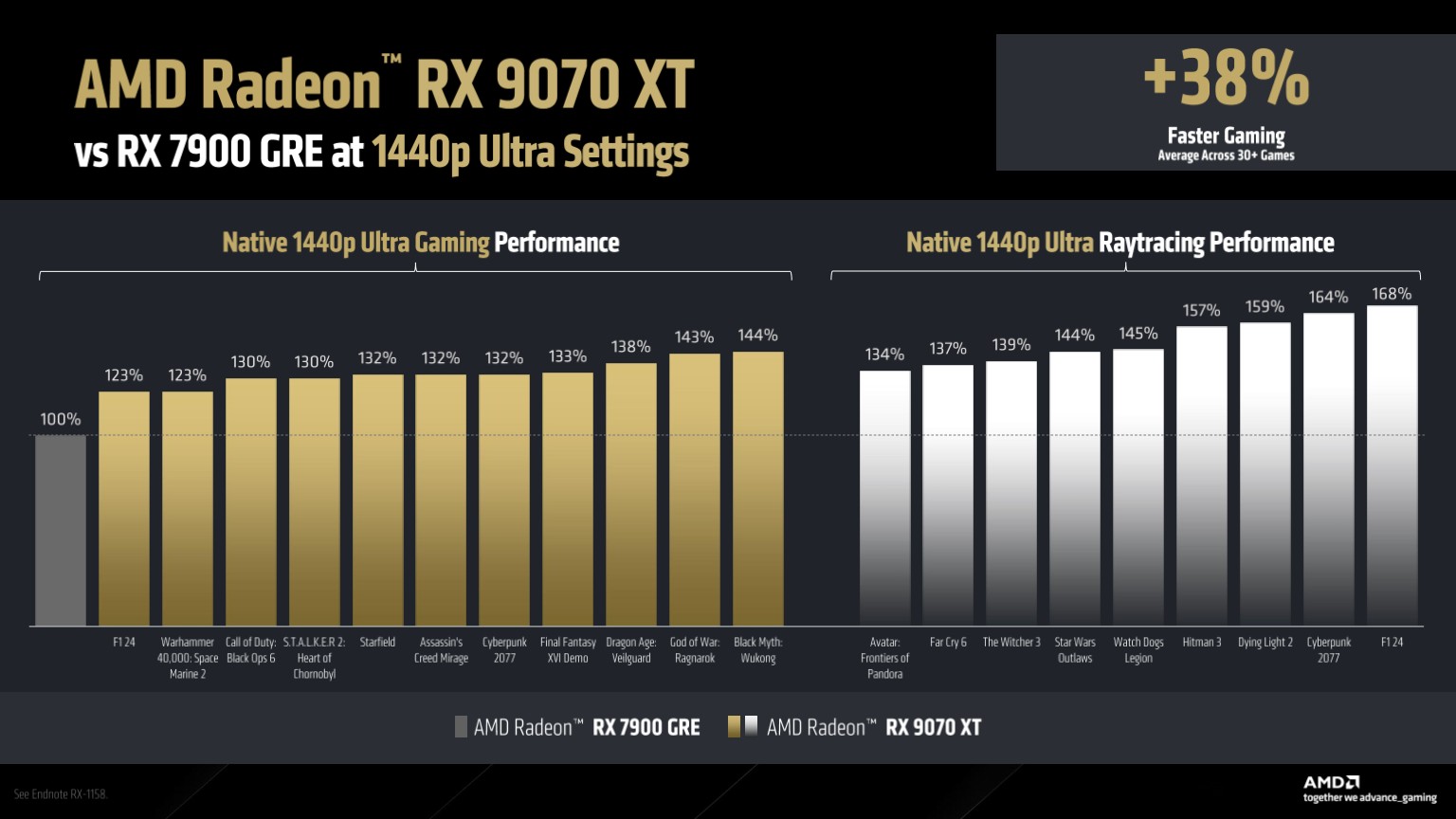

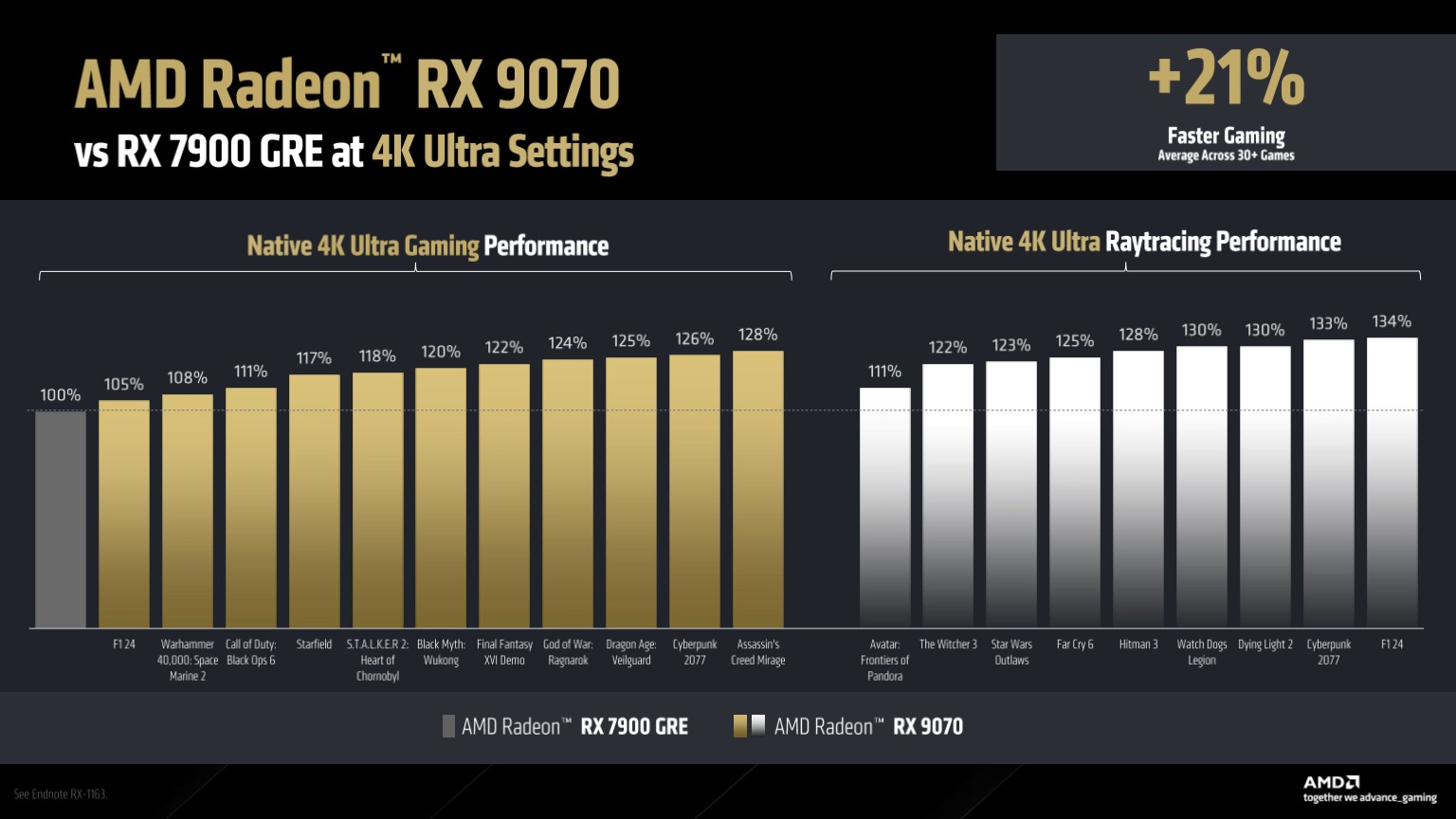

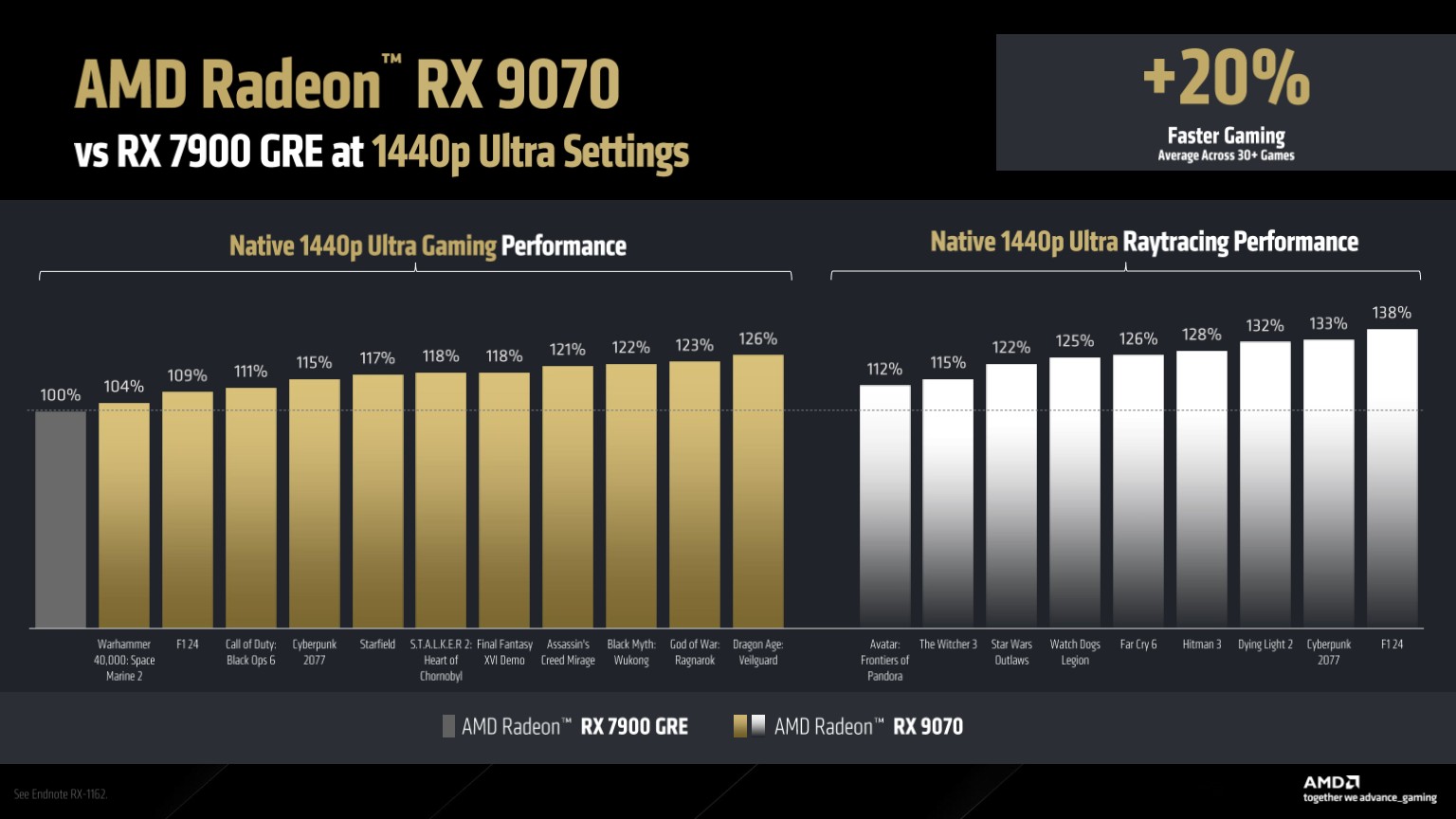

Speaking of which, AMD’s performance charts pit the RX 9070 XT against the RX 7900 GRE. That’s an upper mid-range card of the RDNA 3 generation, which gives some clues as to where AMD thinks the RX 9070 XT sits in the stack compared to its previous models.

It’s claimed that the RX 9070 XT delivers between 23% and 48% more performance than the RX 7900 GRE at 4K native Ultra settings in a variety of games, with Cyberpunk 2077 gaining the most frames. The 4K Ultra ray tracing performance comparison, however, shows the new card gaining 66% more performance in F1 24, alongside Cyberpunk 2077, compared to the older card, suggesting that those ray tracing architectural improvements really might translate into significant real world gains.

Still, it’s always best to treat figures like this as indicators, rather than cold hard data. It’s no surprise that the RX 9070 XT would show significant ray tracing improvements over the RX 7900 GRE as, to be honest, that card was never much cop with the ray tracing goodies enabled.

And AMD, like any manufacturer, is always going to present the data that shows off its GPUs at their very best. We’ll be putting both the RX 9070 and RX 9070 XT through their paces soon in our own independent testing, and that’s where we’ll get a better idea of the exact performance of the new cards.

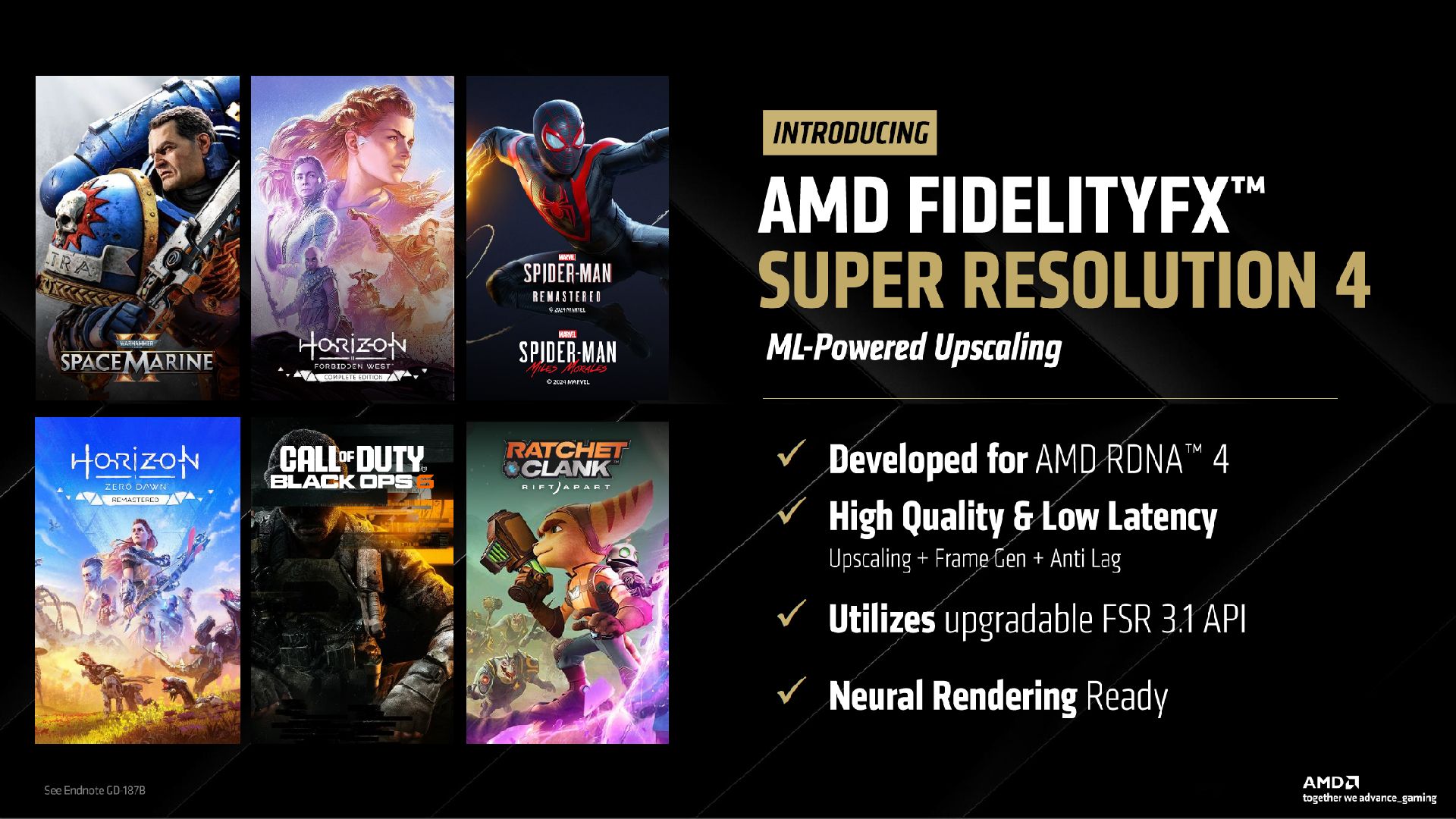

FSR 4

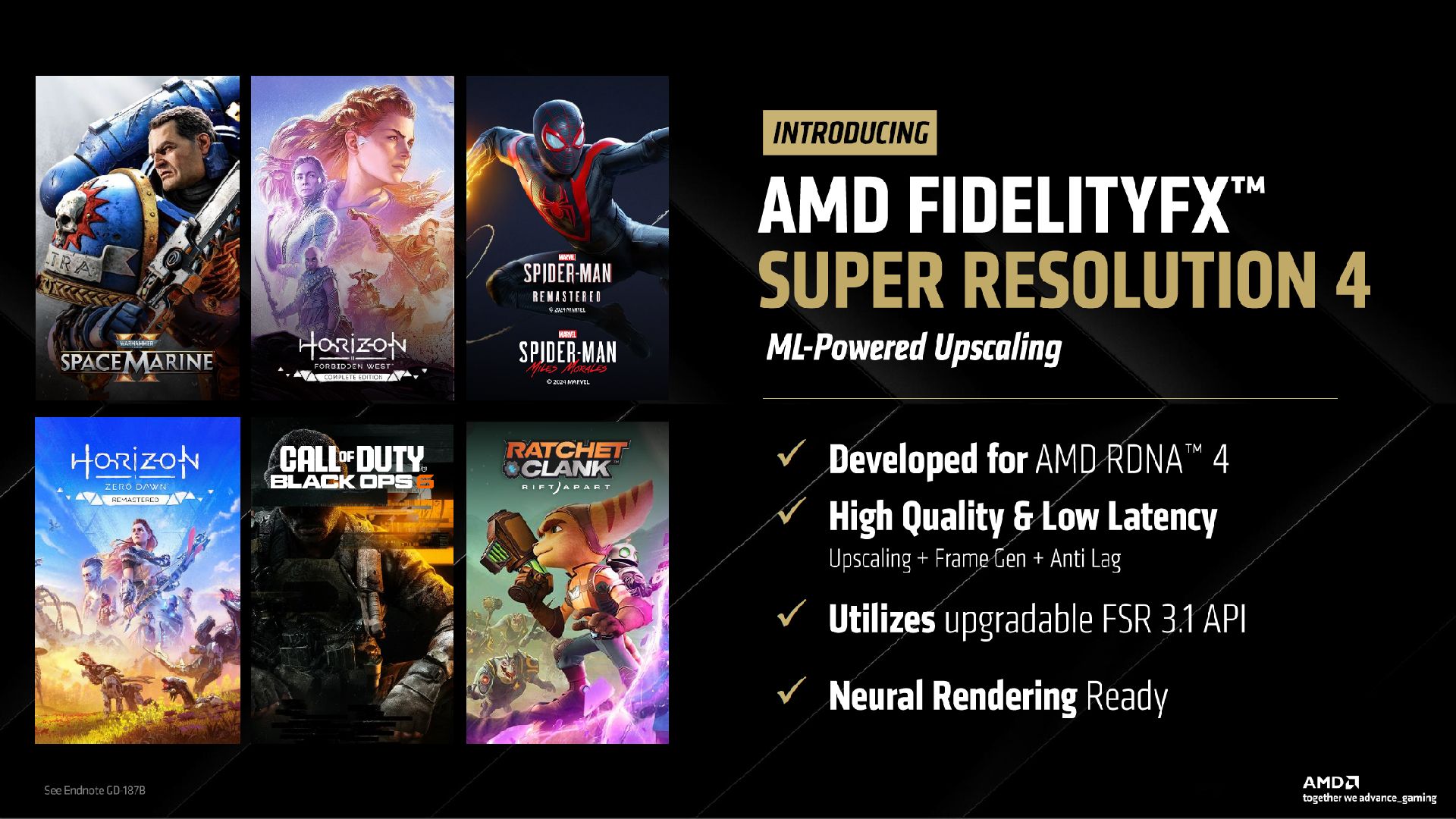

And I haven’t even mentioned FSR 4. Nvidia’s RTX 50-series cards lean heavily on DLSS 4 for massive performance gains, and AMD has often felt way behind the curve with its competing upscaling solution, FSR. Previously, FSR was a compute-based upscaler, but the new version finally throws machine learning into the mix thanks to those new AI accelerators on the RDNA 4 cards.

Matrix calculations on the RDNA 3 generation cards were handled by non-dedicated architecture on the CUs, whereas the dedicated AI accelerator matrix units fitted to the RX 9070-series have finally brought FSR into the machine learning realm.

So yes, that means FSR 4 is RX 9070-series dependent, and older AMD card users (like myself) don’t get to play. Still, the machine learning models have been trained on AMD’s EPYC and Instinct AI hardware, and the claimed image quality and performance boosts gained as a result look impressive in the screenshots so far.

Looking at the first image above, it’s clear where FSR 3.1 fails in the image-quality stakes. The tops of the spires in the distance show considerable artifacting and missing pixels, whereas FSR 4 appears to do a much better job at preserving image data from native, even at Performance settings.

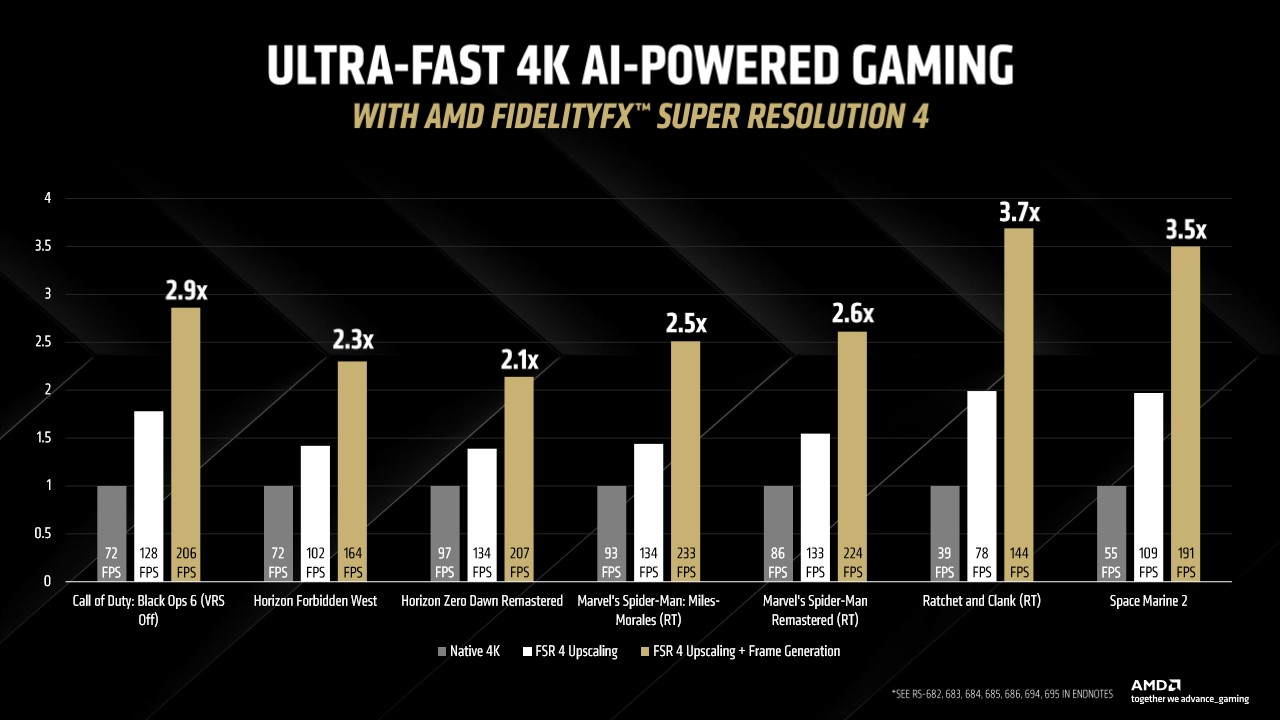

AMD is also claiming a 3.5x uplift in performance in Space Marine 2 at 4K with FSR 4 and frame generation enabled, with significant frame rate gains reported in Call of Duty: Black Ops 6, Ratchet and Clank, and Marvel’s Spider-Man: Miles Morales, among others.

AMD says that there’ll be 30+ games supported at launch, with 75+ coming in 2025 from a variety of developers. Fingers crossed this won’t be like FSR 3.1, which still suffers from limited support in many modern releases, although FSR 4 will apparently be a drop-in upgrade for games using the FSR 3.1 API.

There’s also a new version of AMD Fluid Motion Frames on the block, AFMF 2.1. It’s claimed to deliver improved frame generation image quality with reduced ghosting and better temporal tracking, and will be supported by AMD RX 6000, 7000 and 9070-series cards alongside the iGPU in Ryzen AI 300 series processors.

So, plenty to get excited about here, especially that pricing. Many had assumed that AMD would aim for a $699 price point for the RX 9070 XT, but $599? That seems downright reasonable if the performance claims prove out.

AMD also claims “wide availability” come March 6, with AIBs such as Acer, ASRock, Asus, Gigabyte, Sapphire and more providing bountiful numbers of the new cards. Whether that proves out in practice remains to be seen, but given that it’s extremely difficult to get hold of an RTX 50-series card right now at anywhere close to its MSRP, that potentially bodes well for sales if stocks really are as plentiful as AMD says.

So, after months of speculation, we finally have some AMD GPU competition on the way. While RDNA 4 doesn’t look like a sea change when compared to RDNA 3, key improvements in ray tracing acceleration and AI might just be what the doctor ordered, and I’ll be keen to see what they translate to in real world performance when we test the cards for ourselves.

Roll on March 6, that’s what I say. The battle of the graphics cards begins once more.
